Agustina documentation

Repository used for BIFI's external documentation, available to all.

| Members | Contact |
|---|---|
| Daniel Martínez Cucalón | daniel.martinez@bifi.es |
| Sergio Martínez-Losa | sergio.martinez@bifi.es |

Procedimientos básicos en Agustina

Información acerca de los procedimientos de gestión en Agustina (English version at the end).

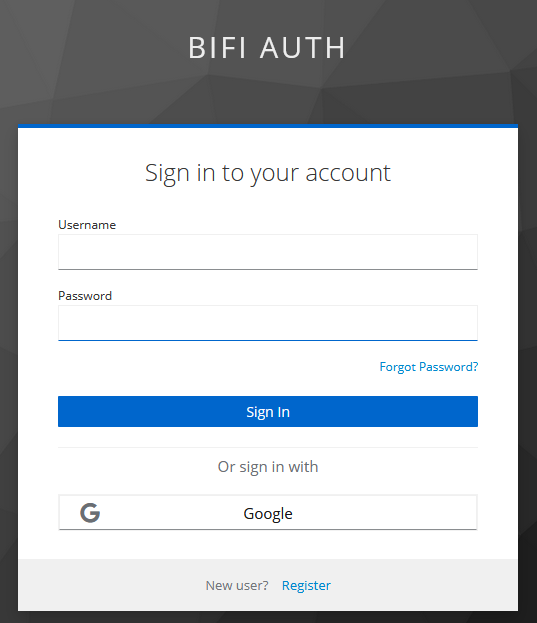

Solicitud de acceso al sistema

Para obtener un usuario del sistema Agustina es necesario llevar a cabo una serie de pasos que comprenden la creación de un proyecto, sus cuentas asociadas, activación de las mismas y vinculación al proyecto creado.

Los formularios a cumplimentar se encuentran en : https://soporte.bifi.unizar.es/forms/form.php

Ingreso en el sistema

El comando para acceder a Agustina por ssh es:

ssh id_usuario@agustina.bifi.unizar.es

Ejemplo:

ssh john.diaz@agustina.bifi.unizar.es

El usuario podrá acceder de este modo siempre y cuando tenga una dirección IP fija abierta en el firewall. En caso contrario, el acceso podrá hacerse a través de Bridge:

ssh id_usuario@bridge.bifi.unizar.es

y una vez hecho el login:

ssh id_usuario@agustina.bifi.unizar.es

Ejemplo:

ssh jdiazlag@bridge.bifi.unizar.es

ssh john.diaz@agustina.bifi.unizar.es

Editores de texto

El editor establecido por defecto es Vi, aunque pueden cargarse Emacs (module load emacs) o Vim (module load vim).

Uso del almacenamiento

Una vez hecho login en el sistema Agustina, el usuario dispondrá de un pequeño espacio (limitado por cuota) bajo su directorio home. Dicho espacio no está pensado para almacenar datos, ya que es muy reducido; su uso está orientado a guardar scripts, notas y elementos ligeros.

Cada usuario podrá acceder a espacio de almacenamiento scratch bajo sistema LUSTRE, con mayor capacidad y orientado a uso de trabajo más intensivo, ubicado en /fs/agustina/id_usuario

Ejemplo:

/fs/agustina/john.diaz/

Gestión de trabajos

IMPORTANTE: Cualquier trabajo que se lance de forma local en los nodos de login será cancelado. Los jobs deben lanzarse contra el cluster.

Agustina se basa en un sistema Slurm de gestión de colas. El procedimiento normal para ejecutar trabajos se basa en la creación de un script indicando las características de la tarea y el uso del comando sbatch.

El sistema dispone de varias particiones:

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

agustina_fat up 7-00:00:00 3 idle afat[01-03]

agustina_thin* up 7-00:00:00 93 idle athin[01-93]

rasmia_hopper up 7-00:00:00 2 idle agpuh[01-03]

rasmia_ada up 7-00:00:00 9 idle agpul[01-09]

cierzo_thin up 7-00:00:00 78 idle czthin[01-79]

cierzo_fat up 7-00:00:00 1 idle czfat01

cierzo_kepler up 7-00:00:00 4 idle czgpuk[01-04]

full up 7-00:00:00 96 idle afat[01-03],athin[01-93],agpuh[01-03],agpul[01-09],czthin[01-79],czfat01, czgpuk[01-04]

RES up 7-00:00:00 9 idle afat01,athin[01-02,04-06,09,11-12,14-16,18-23,25-30]

Siendo agustina_thin la que comprende los nodos estándar de computación, agustina_fat la de nodos con el doble de memoria y full la totalidad de los nodos de cómputo. Por otra parte tenemos las particiones de GPU, las cuales corresponden a rasmia_hopper con GPUs H100 y rasmia_ada con GPUs L40S. Podremos comprobar su estado con el comando sinfo.

También se disponen de colas para ejecutar sobre la infraestructura cierzo (cierzo_thin y cierzo_fat), así como una cola de GPUs llamada cierzo_kepler, para más información consultar: https://cesar.unizar.es/hpc/.

Agustina está basado en procesadores AMD mientras que RasmIA y cierzo están basados en procesadores Intel.

IMPORTANTE: Los usuarios que usen horas de cálculo provenientes de la RES (Red Española de Supercomputación), deberán usar la partición RES.

Ejemplo helloWorld.sh para enviar a la cola de trabajo:

#!/usr/bin/env bash

#SBATCH -J helloTest # job name

#SBATCH -o helloTest.o%j # output and error file name (%j expands to jobID)

#SBATCH -N 3 # total number of nodes

#SBATCH --ntasks-per-node=12 # number of cores per node (maximum 24)

#SBATCH -p agustina_thin # partition

echo "Hello world, I am running on node $HOSTNAME"

sleep 10

date

Para lanzar la tarea es imprescindible hacerlo asociándola a su proyecto correspondiente:

sbatch --account id_proyecto helloWorld.sh

De lo contrario, obtendremos un mensaje de error:

sbatch: error: QOSMaxSubmitJobPerUserLimit

sbatch: error: Batch job submission failed: Job violates accounting/QOS policy (job submit limit, user's size and/or time limits)

Podemos comprobar el estado de ejecución del script con el comando squeue.

Ejemplo:

[john.diaz@alogin02 slurmTest]$ sbatch --account proyecto_prueba helloWorld.sh

Submitted batch job 1707

[john.diaz@alogin02 slurmTest]$ squeue

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

1707 agustina_thin helloTes john.dia R 0:01 3 athin[49-51]

Existen otros comandos útiles para la gestión de trabajos:

Cancelación

scancel job_id

Información detallada

scontrol show job identificadorJob

IMPORTANTE: Los trabajos tienen una duración máxima en el sistema de una semana (7 días desde el lanzamiento del trabajo), por lo tanto, si el trabajo dura más de una semana el sistema de colas lo cancelará. Para evitar este hecho, el usuario deberá dividir los datos de entrada para lanzar trabajos con menos carga de procesado o paralelizar su software.

Información sobre códigos de estado: https://confluence.cscs.ch/display/KB/Meaning+of+Slurm+job+state+codes

Guía de usuario Slurm: https://slurm.schedmd.com/quickstart.html

Basic procedures on Agustina

Information about management procedures on Agustina.

Requesting access to the system

To obtain a user account on the Agustina system you must follow a series of steps that include creating a project, its associated accounts, activating them and linking them to the created project.

The forms to complete are available at: https://soporte.bifi.unizar.es/forms/form.php

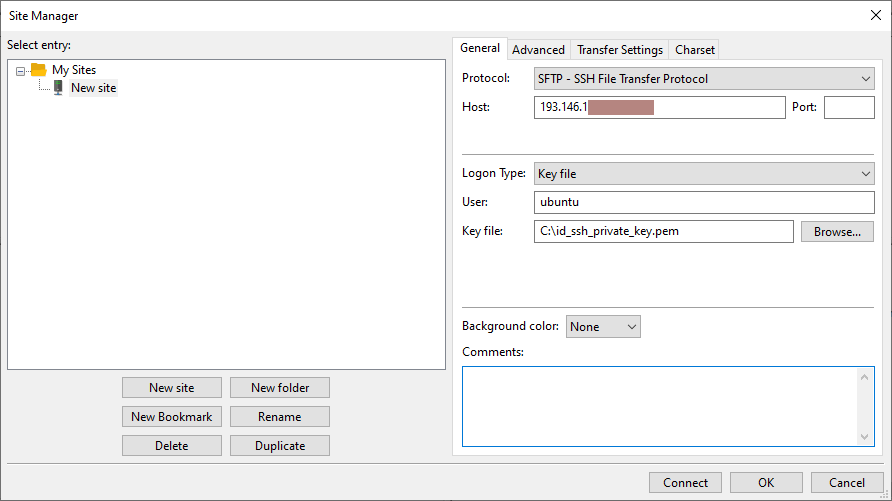

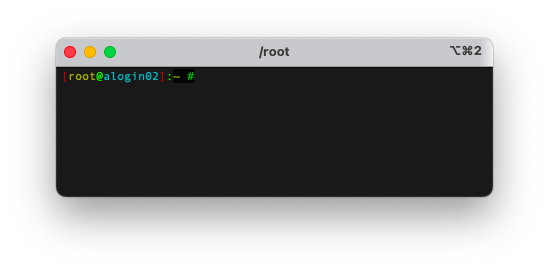

Logging in to the system

The command to access Agustina by SSH is:

ssh user_id@agustina.bifi.unizar.es

Example:

ssh john.diaz@agustina.bifi.unizar.es

A user can access this way provided they have a fixed IP address allowed through the firewall. Otherwise, access can be done via the Bridge:

ssh user_id@bridge.bifi.unizar.es

and once logged in:

ssh user_id@agustina.bifi.unizar.es

Example:

ssh jdiazlag@bridge.bifi.unizar.es

ssh john.diaz@agustina.bifi.unizar.es
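The two-step login through Bridge can often be collapsed into a single command with the OpenSSH ProxyJump option (`-J`). The sketch below only builds and prints that command. It assumes that Bridge allows connection forwarding, which this guide does not confirm, and the helper name `agustina_ssh_command` is purely illustrative.

```shell
# Print a one-step SSH command that reaches Agustina through Bridge.
# Assumption: Bridge permits ProxyJump (-J) forwarding; if it does not,
# use the two separate ssh commands shown above.
agustina_ssh_command() {
    bridge_user="$1"     # account on bridge.bifi.unizar.es
    agustina_user="$2"   # account on agustina.bifi.unizar.es
    printf 'ssh -J %s@bridge.bifi.unizar.es %s@agustina.bifi.unizar.es\n' \
        "$bridge_user" "$agustina_user"
}

# Example with the user names used in this guide
agustina_ssh_command jdiazlag john.diaz
```

Run the printed command from your own workstation, not from the login nodes.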

Text editors

The default editor is Vi, although Emacs (module load emacs) or Vim (module load vim) modules can be loaded.

Storage usage

After logging into Agustina, the user will have a small quota-limited home directory. This space is not intended for data storage, as it is very limited; it is meant to store scripts, notes and small items.

Each user can access larger scratch storage on a LUSTRE filesystem for more intensive work, located at /fs/agustina/user_id

Example:

/fs/agustina/john.diaz/
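Because the home quota is small, it can help to create one working directory per job under scratch and run everything from there. The following is a minimal sketch under that assumption; the `SCRATCH_ROOT` variable and the function names are hypothetical helpers, not part of the Agustina setup.

```shell
# Create a working directory for one job under the user's scratch space.
# SCRATCH_ROOT defaults to the scratch path documented above; overriding it
# is only meant for trying the function on another machine.
make_job_dir() {
    job_name="$1"
    scratch_root="${SCRATCH_ROOT:-/fs/agustina}"
    job_dir="$scratch_root/$(whoami)/$job_name"
    mkdir -p "$job_dir" && printf '%s\n' "$job_dir"
}

# Show how much space the home directory currently uses.
home_usage() {
    du -sh "$HOME"
}

# Typical use on the cluster:
#   cd "$(make_job_dir helloTest)"
#   home_usage
```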

Job management

IMPORTANT: Any job launched directly on the login nodes will be cancelled. Jobs must be submitted to the cluster.

Agustina uses Slurm as the queuing system. The normal procedure to run jobs is to create a script specifying the job characteristics and submit it with sbatch.

The system provides several partitions:

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

agustina_fat up 7-00:00:00 3 idle afat[01-03]

agustina_thin* up 7-00:00:00 93 idle athin[01-93]

rasmia_hopper up 7-00:00:00 2 idle agpuh[01-03]

rasmia_ada up 7-00:00:00 9 idle agpul[01-09]

cierzo_thin up 7-00:00:00 78 idle czthin[01-79]

cierzo_fat up 7-00:00:00 1 idle czfat01

cierzo_kepler up 7-00:00:00 4 idle czgpuk[01-04]

full up 7-00:00:00 96 idle afat[01-03],athin[01-93],agpuh[01-03],agpul[01-09],czthin[01-79],czfat01, czgpuk[01-04]

RES up 7-00:00:00 9 idle afat01,athin[01-02,04-06,09,11-12,14-16,18-23,25-30]

agustina_thin contains the standard compute nodes, agustina_fat contains nodes with double memory, and full includes all compute nodes. GPU partitions are rasmia_hopper (H100 GPUs) and rasmia_ada (L40S GPUs). Check their status with sinfo.

There are also queues for the Cierzo infrastructure (cierzo_thin and cierzo_fat) and a GPU queue cierzo_kepler. For more information see: https://cesar.unizar.es/hpc/

Agustina nodes use AMD processors, while RasmIA and Cierzo use Intel processors.

IMPORTANT: Users using computing hours from the Spanish Supercomputing Network (RES) must use the RES partition.

Example helloWorld.sh to submit a job:

#!/usr/bin/env bash

#SBATCH -J helloTest # job name

#SBATCH -o helloTest.o%j # output and error file name (%j expands to jobID)

#SBATCH -N 3 # total number of nodes

#SBATCH --ntasks-per-node=12 # number of cores per node (maximum 24)

#SBATCH -p agustina_thin # partition

echo "Hello world, I am running on node $HOSTNAME"

sleep 10

date

When submitting the job it is mandatory to associate it with the corresponding project account:

sbatch --account project_id helloWorld.sh

Otherwise you will get an error:

sbatch: error: QOSMaxSubmitJobPerUserLimit

sbatch: error: Batch job submission failed: Job violates accounting/QOS policy (job submit limit, user's size and/or time limits)
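One way to avoid this error is a small wrapper that refuses to submit when the project account is missing. This is only a sketch: the function name `submit_job` and the `SBATCH_BIN` override (used here for a dry run) are assumptions made for illustration.

```shell
# Submit a script only when a project account is given.
# SBATCH_BIN is a hypothetical override for dry runs; by default the real
# sbatch command is called.
submit_job() {
    project_id="$1"
    script="$2"
    if [ -z "$project_id" ] || [ -z "$script" ]; then
        echo "usage: submit_job project_id script.sh" >&2
        return 1
    fi
    "${SBATCH_BIN:-sbatch}" --account "$project_id" "$script"
}

# Dry run: echo stands in for sbatch and prints the arguments it would receive
SBATCH_BIN=echo submit_job proyecto_prueba helloWorld.sh
```

Slurm also reads the `SBATCH_ACCOUNT` environment variable as a default for `--account`, so exporting it in your shell session is another option.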

You can check the job status with squeue.

Example:

[john.diaz@alogin02 slurmTest]$ sbatch --account proyecto_prueba helloWorld.sh

Submitted batch job 1707

[john.diaz@alogin02 slurmTest]$ squeue

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

1707 agustina_thin helloTes john.dia R 0:01 3 athin[49-51]

Other useful job management commands:

Cancel a job:

scancel job_id

Detailed job information:

scontrol show job jobID

IMPORTANT: Jobs have a maximum duration of one week (7 days) in the system; if a job runs longer it will be cancelled. To avoid this, split input data to run shorter jobs or parallelize your software.
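A common way to split the input is a Slurm job array, where each array task processes one chunk of the data. The sketch below computes the line range that belongs to each task. `--array` and `SLURM_ARRAY_TASK_ID` are standard Slurm features, while the job name, the chunk count, the input size and the `my_program` command in the final comment are placeholders.

```shell
#!/bin/bash
#SBATCH -J chunked-job
#SBATCH -p agustina_thin
#SBATCH -N 1
#SBATCH --array=0-3            # four array tasks, IDs 0..3

# Compute the "first last" line numbers of the chunk for one array task.
chunk_range() {
    total_lines="$1"; n_chunks="$2"; index="$3"
    per_chunk=$(( (total_lines + n_chunks - 1) / n_chunks ))   # ceiling division
    first=$(( index * per_chunk + 1 ))
    last=$(( first + per_chunk - 1 ))
    if [ "$last" -gt "$total_lines" ]; then
        last="$total_lines"
    fi
    echo "$first $last"
}

# Outside Slurm the task ID defaults to 0 so the script can be tried locally
task_id="${SLURM_ARRAY_TASK_ID:-0}"
chunk_range 1000 4 "$task_id"

# A real job would then process only those lines, for example:
#   sed -n "${first},${last}p" input.txt | ./my_program
```

Submit it like any other script, with `sbatch --account project_id` followed by the script name. Each array task is subject to the 7-day limit on its own.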

Information about Slurm job state codes: https://confluence.cscs.ch/display/KB/Meaning+of+Slurm+job+state+codes

Slurm user guide: https://slurm.schedmd.com/quickstart.html

Anaconda 2024

Anaconda 2024 is a free and open-source software distribution of the Python and R languages, used in data science and machine learning. It is aimed at simplifying the deployment and management of software packages.

WARNING: If you do not know how to create your own Anaconda environment on the Agustina cluster, please read the instructions below. If you still need help, send an email with your request to agustina@bifi.es for further assistance.

Anaconda is useful to create your own conda environment in the /fs/agustina/USER folder. To do this, you can import your own yml file and create the environment with the following commands:

Usage

module load anaconda/2024

export CONDA_PKGS_DIRS=/fs/agustina/$(whoami)/.conda-pkgs

mkdir -p /fs/agustina/$(whoami)/conda-env

$ANACONDA3_HOME/bin/conda-env create --prefix /fs/agustina/$(whoami)/conda-env/my-conda-env --file /fs/agustina/$(whoami)/conda-env/my-environment-file.yml

source $ANACONDA3_HOME/bin/activate /fs/agustina/$(whoami)/conda-env/my-conda-env

Anaconda 2025

module load anaconda/2025

export CONDA_PKGS_DIRS=/fs/agustina/$(whoami)/.conda-pkgs

mkdir -p /fs/agustina/$(whoami)/conda-env

$ANACONDA3_HOME/bin/conda env create --prefix /fs/agustina/$(whoami)/conda-env/my-conda-env --file /fs/agustina/$(whoami)/conda-env/my-environment-file.yml

source $ANACONDA3_HOME/bin/activate /fs/agustina/$(whoami)/conda-env/my-conda-env

Once the environment is ready and activated, you can install more packages with pip install or conda install inside it. Then you can create a bash script to run the environment on the Agustina cluster:

Example script : test-anaconda.sh

#!/bin/bash

#SBATCH -J test-anaconda

#SBATCH -o test-anaconda-%j.msg

#SBATCH -e test-anaconda-%j.err

#SBATCH -p agustina_thin

#SBATCH -N 1

module load anaconda/2024

# activate environment

source $ANACONDA3_HOME/bin/activate /fs/agustina/$(whoami)/conda-env/my-conda-env

python --version

echo "done!!"

Submit with :

sbatch --account=your_project_ID test-anaconda.sh

Base file: my-environment-file.yml

name: base

channels:

- https://repo.anaconda.com/pkgs/main

- https://repo.anaconda.com/pkgs/r

- conda-forge

dependencies:

- _libgcc_mutex=0.1=conda_forge

- _openmp_mutex=4.5=2_gnu

- archspec=0.2.3=pyhd8ed1ab_0

- boltons=24.0.0=pyhd8ed1ab_0

- brotli-python=1.1.0=py312h2ec8cdc_2

- bzip2=1.0.8=h4bc722e_7

- c-ares=1.32.3=h4bc722e_0

- ca-certificates=2024.8.30=hbcca054_0

- certifi=2024.8.30=pyhd8ed1ab_0

- cffi=1.17.1=py312h06ac9bb_0

- charset-normalizer=3.4.0=pyhd8ed1ab_0

- colorama=0.4.6=pyhd8ed1ab_0

- conda=24.9.2=py312h7900ff3_0

- conda-libmamba-solver=24.9.0=pyhd8ed1ab_0

- conda-package-handling=2.4.0=pyh7900ff3_0

- conda-package-streaming=0.11.0=pyhd8ed1ab_0

- distro=1.9.0=pyhd8ed1ab_0

- fmt=10.2.1=h00ab1b0_0

- frozendict=2.4.6=py312h66e93f0_0

- h2=4.1.0=pyhd8ed1ab_0

- hpack=4.0.0=pyh9f0ad1d_0

- hyperframe=6.0.1=pyhd8ed1ab_0

- idna=3.10=pyhd8ed1ab_0

- jsonpatch=1.33=pyhd8ed1ab_0

- jsonpointer=3.0.0=py312h7900ff3_1

- keyutils=1.6.1=h166bdaf_0

- krb5=1.21.3=h659f571_0

- ld_impl_linux-64=2.43=h712a8e2_2

- libarchive=3.7.4=hfca40fe_0

- libcurl=8.10.1=hbbe4b11_0

- libedit=3.1.20191231=he28a2e2_2

- libev=4.33=hd590300_2

- libexpat=2.6.4=h5888daf_0

- libffi=3.4.2=h7f98852_5

- libgcc=14.2.0=h77fa898_1

- libgcc-ng=14.2.0=h69a702a_1

- libgomp=14.2.0=h77fa898_1

- libiconv=1.17=hd590300_2

- libmamba=1.5.9=h4cc3d14_0

- libmambapy=1.5.9=py312h7fb9e8e_0

- libnghttp2=1.64.0=h161d5f1_0

- libnsl=2.0.1=hd590300_0

- libsolv=0.7.30=h3509ff9_0

- libsqlite=3.47.0=hadc24fc_1

- libssh2=1.11.0=h0841786_0

- libstdcxx=14.2.0=hc0a3c3a_1

- libstdcxx-ng=14.2.0=h4852527_1

- libuuid=2.38.1=h0b41bf4_0

- libxcrypt=4.4.36=hd590300_1

- libxml2=2.13.4=h064dc61_2

- libzlib=1.3.1=hb9d3cd8_2

- lz4-c=1.9.4=hcb278e6_0

- lzo=2.10=hd590300_1001

- mamba=1.5.9=py312h9460a1c_0

- menuinst=2.2.0=py312h7900ff3_0

- ncurses=6.5=he02047a_1

- openssl=3.4.0=hb9d3cd8_0

- packaging=24.2=pyhd8ed1ab_0

- pip=24.3.1=pyh8b19718_0

- platformdirs=4.3.6=pyhd8ed1ab_0

- pluggy=1.5.0=pyhd8ed1ab_0

- pybind11-abi=4=hd8ed1ab_3

- pycosat=0.6.6=py312h98912ed_0

- pycparser=2.22=pyhd8ed1ab_0

- pysocks=1.7.1=pyha2e5f31_6

- python=3.12.7=hc5c86c4_0_cpython

- python_abi=3.12=5_cp312

- readline=8.2=h8228510_1

- reproc=14.2.4.post0=hd590300_1

- reproc-cpp=14.2.4.post0=h59595ed_1

- requests=2.32.3=pyhd8ed1ab_0

- ruamel.yaml=0.18.6=py312h66e93f0_1

- ruamel.yaml.clib=0.2.8=py312h66e93f0_1

- setuptools=75.3.0=pyhd8ed1ab_0

- tk=8.6.13=noxft_h4845f30_101

- tqdm=4.67.0=pyhd8ed1ab_0

- truststore=0.10.0=pyhd8ed1ab_0

- tzdata=2024b=hc8b5060_0

- urllib3=2.2.3=pyhd8ed1ab_0

- wheel=0.45.0=pyhd8ed1ab_0

- xz=5.2.6=h166bdaf_0

- yaml-cpp=0.8.0=h59595ed_0

- zstandard=0.23.0=py312hef9b889_1

- zstd=1.5.6=ha6fb4c9_0

prefix:
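The base file above pins every package of the base installation. For a new project, a much shorter file is often enough. The sketch below writes such a file; the package list, the Python version and the temporary output path are illustrative assumptions, so adapt them to your project and place the file under /fs/agustina/$(whoami)/conda-env/ before creating the environment.

```shell
# Write a small conda environment file; the package choices are examples only.
write_env_file() {
    target="$1"
    cat > "$target" <<'EOF'
name: my-conda-env
channels:
  - conda-forge
dependencies:
  - python=3.12
  - pip
  - numpy
EOF
}

# Written to a temporary location here so the sketch can be tried anywhere
env_file="${TMPDIR:-/tmp}/my-environment-file.yml"
write_env_file "$env_file"
cat "$env_file"
```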

More info :

Autodock-Vina

AutoDock Vina is an open-source program for doing molecular docking. It was originally designed and implemented by Dr. Oleg Trott in the Molecular Graphics Lab (now CCSB) at The Scripps Research Institute.

Usage

Example script : testAutodock.sh

#!/bin/bash

#

#SBATCH -J testAutodock # job name

#SBATCH -o testAutodock.o%j # output file name (%j expands to jobID)

#SBATCH -e testAutodock.e%j # error file name (%j expands to jobID)

#SBATCH -n 2

#SBATCH -p agustina_thin

module load vina/1.2.5

vina_1.2.5_linux_x86_64 --config conf.txt --out out-10modes.pdbqt --exhaustiveness 8 --cpu 2

Submit with :

sbatch --account=your_project_ID testAutodock.sh
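The example script reads its docking parameters from conf.txt, which this guide does not show. The sketch below writes a possible conf.txt using standard Vina configuration keys. The receptor and ligand file names, the search box values and the temporary output path are placeholders to replace with the values for your own system.

```shell
# Write an example conf.txt for AutoDock Vina.
# File names and box coordinates are placeholders, not real data.
write_vina_conf() {
    cat > "$1" <<'EOF'
receptor = receptor.pdbqt
ligand = ligand.pdbqt
center_x = 0.0
center_y = 0.0
center_z = 0.0
size_x = 20.0
size_y = 20.0
size_z = 20.0
num_modes = 10
EOF
}

# Written to a temporary location here so the sketch can be tried anywhere
conf_file="${TMPDIR:-/tmp}/conf.txt"
write_vina_conf "$conf_file"
cat "$conf_file"
```

Keep the value passed to `--cpu` in line with the number of tasks requested with `#SBATCH -n`, as the example script does.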

More info :

- https://vina.scripps.edu/manual/

- https://github.com/sha256feng/Autodock-vina-example?tab=readme-ov-file

Bedtools 2.31.0

Collectively, the bedtools utilities are a swiss-army knife of tools for a wide-range of genomics analysis tasks. The most widely-used tools enable genome arithmetic: that is, set theory on the genome. For example, bedtools allows one to intersect, merge, count, complement, and shuffle genomic intervals from multiple files in widely-used genomic file formats such as BAM, BED, GFF/GTF, VCF. While each individual tool is designed to do a relatively simple task (e.g., intersect two interval files), quite sophisticated analyses can be conducted by combining multiple bedtools operations on the UNIX command line.

Bedtools is developed in the Quinlan laboratory at the University of Utah and benefits from fantastic contributions made by scientists worldwide.

Usage

Example script : test-bedtools.sh

#!/bin/bash

#SBATCH -J bedtools-test

#SBATCH -e bedtools-test%j.err

#SBATCH -o bedtools-test%j.msg

#SBATCH -p agustina_thin # queue (partition)

# load bedtools

module load bedtools/2.31.0

# list bedtools root folder

ls -al $BEDTOOLS_ROOT

bedtools --help

echo "DONE!"

Submit with :

sbatch --account=your_project_ID test-bedtools.sh

More info :

Biogenomics environment with Anaconda 2024

Biogenomics environment with Anaconda 2024 is a custom Anaconda 2024 environment with ready-to-use tools for biogenomics research. The apps included are:

- FastQC

- MultiQC

- Bowtie2

- Bismark

- Trim-galore

- Bedtools

- Samtools

WARNING: If you do not know how to create your own Anaconda environment on the Agustina cluster, please read the instructions below. If you still need help, send an email with your request to agustina@bifi.es for further assistance.

IMPORTANT: If you want to update or install new programs on this conda environment please contact support at agustina@bifi.es for further assistance, pointing out the programs to install or update with the version needed.

Usage

Example script : test-biogenomics.sh

#!/bin/bash

#SBATCH --job-name=test-biogenomics

#SBATCH --output=test-biogenomics-%j.msg

#SBATCH --error=test-biogenomics-%j.err

#SBATCH -p agustina_thin

#SBATCH -N 1

export CRYPTOGRAPHY_OPENSSL_NO_LEGACY=1

module load genomics/2024

echo "FASTQC"

fastqc --help

echo "MULTIQC"

multiqc --help

echo "BOWTIE2"

bowtie2 --help

echo "BISMARK"

bismark --help

echo "TRIM-GALORE"

trim_galore --help

echo "BEDTOOLS"

bedtools --help

echo "SAMTOOLS"

samtools --help

echo "DONE!!!!"

Submit with :

sbatch --account=your_project_ID test-biogenomics.sh

More info :

Comsol

Create physics-based models and simulation applications with this software platform. The Model Builder enables you to combine multiple physics in any order for simulations of real-world phenomena. The Application Builder gives you the tools to build your own simulation apps. The Model Manager is a modeling and simulation management tool.

Usage

Example script : testComsol.sh

#!/bin/bash

#

#SBATCH -J a00P1TMax80ay220d300 # job name

#SBATCH -o a00P1TMax80ay220d300.o%j # output and error file name (%j expands to jobID)

#SBATCH --nodes 2

#SBATCH --exclusive

#SBATCH -p agustina_thin

module load comsol/6.1

comsol batch -inputfile a00P1TMax80ay220d300.mph -outputfile outa00P1TMax80ay220d300.mph -batchlog loga00P1TMax80ay220d300.txt

Submit with :

sbatch --account=your_project_ID testComsol.sh

More info :

CP2K

CP2K is a quantum chemistry and solid state physics software package that can perform atomistic simulations of solid state, liquid, molecular, periodic, material, crystal, and biological systems. CP2K provides a general framework for different modeling methods such as DFT using the mixed Gaussian and plane waves approaches GPW and GAPW. Supported theory levels include DFTB, LDA, GGA, MP2, RPA, semi-empirical methods (AM1, PM3, PM6, RM1, MNDO, …), and classical force fields (AMBER, CHARMM, …). CP2K can do simulations of molecular dynamics, metadynamics, Monte Carlo, Ehrenfest dynamics, vibrational analysis, core level spectroscopy, energy minimization, and transition state optimization using NEB or dimer method.

Usage

Example script : testCp2k.sh

#!/bin/bash

#SBATCH -e cp2k_test%j.err

#SBATCH -o cp2k_test%j.msg

#SBATCH -p agustina_thin

#SBATCH -N 4

#SBATCH --ntasks-per-node=24

module load cp2k/2024.1

cp2k --help

cp2k --version

Submit with :

sbatch --account=your_project_ID testCp2k.sh

More info :

CUDA 12.0

CUDA is a proprietary parallel computing platform and application programming interface that allows software to use certain types of graphics processing units for accelerated general-purpose processing, an approach called general-purpose computing on GPUs.

IMPORTANT: If you make use of GPU queues, it is mandatory to select a minimum number of CPU cores given by 16 cores/GPU multiplied by the amount of nodes selected and the number of selected GPUs (16 cores/gpu * num. GPUs * num. nodes).
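The rule above is simple arithmetic, sketched here as a helper so the value can be checked before writing the `#SBATCH` lines. The function name `min_gpu_cores` is illustrative.

```shell
# Minimum CPU cores for a GPU job: 16 cores per GPU * number of GPUs * nodes.
min_gpu_cores() {
    gpus="$1"
    nodes="$2"
    echo $(( 16 * gpus * nodes ))
}

min_gpu_cores 1 2   # 1 GPU and 2 nodes, prints 32
min_gpu_cores 4 1   # 4 GPUs and 1 node, prints 64
```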

Usage

Example script : test-cuda.sh

#!/bin/bash

#SBATCH -J cuda-test # job name

#SBATCH -o cuda-test.o%j # output and error file name (%j expands to jobID)

#SBATCH -p rasmia_ada # queue L40S (partition)

#SBATCH -N 2 # total number of nodes

#SBATCH --gres=gpu:1 # gpus per node

#SBATCH --cpus-per-task=32 # 16 cores per GPU * 1 GPU * 2 nodes

module load cuda/12.0

echo $CUDA12_HOME

echo $CUDA12_BIN

echo $CUDA12_LIB64

echo $CUDA12_INCLUDE

$CUDA12_BIN/nvcc --version

echo "done!!"

The informative report will be shown in the job output file.

Submit with :

sbatch --account=your_project_ID test-cuda.sh

More info :

CREST

CREST was developed as a utility and driver program for the semiempirical quantum chemistry package xtb. The program's name originated as an abbreviation for Conformer–Rotamer Ensemble Sampling Tool, as it was developed as a program for conformational sampling at the extended tight-binding level GFN-xTB. Since then, several functionalities have been added to the code. In its current state, the program provides a variety of sampling procedures, for example for improved thermochemistry or explicit solvation.

Usage

Example script : testCrest.sh

#!/bin/bash

#SBATCH -e crest_test%j.err

#SBATCH -o crest_test%j.msg

#SBATCH -p agustina_thin

#SBATCH -N 4

#SBATCH --ntasks-per-node=24

module load crest/3.0.1

crest --help

crest --version

Submit with :

sbatch --account=your_project_ID testCrest.sh

More info :

DIA-NN

DIA-NN is a universal software suite for data-independent acquisition (DIA) proteomics data processing. Conceived at the University of Cambridge, UK, in the laboratory of Kathryn Lilley (Cambridge Centre for Proteomics), DIA-NN opened a new chapter in proteomics, introducing a number of algorithms which enabled reliable, robust and quantitatively accurate large-scale experiments using high-throughput methods. DIA-NN is currently being further developed in the laboratory of Vadim Demichev at the Charité (University Medicine Berlin, Germany).

Installed version: DIA-NN v1.8.1

Usage

Example script : testDIANN.sh

#!/bin/bash

#SBATCH -e diann_test%j.err

#SBATCH -o diann_test%j.msg

#SBATCH -p agustina_thin

#SBATCH -N 1

#SBATCH --ntasks-per-node=1

module load diann/1.8.1

$DIANN_HOME/diann-1.8.1 -h

Submit with :

sbatch --account=your_project_ID testDIANN.sh

More info :

Filogenics environment with Anaconda 2024

Filogenics environment with Anaconda 2024 is a custom Anaconda 2024 environment with ready-to-use tools for phylogenetic research. The apps included are:

- FastQC

- fastp

- AdapterRemoval

- bwa

- Samtools

- mapDamage

- RAxML

- IQTREE2

- MrBayes

- Beast2

WARNING: If you do not know how to create your own Anaconda environment on the Agustina cluster, please read the instructions below. If you still need help, send an email with your request to agustina@bifi.es for further assistance.

IMPORTANT: If you want to update or install new programs on this conda environment please contact support at agustina@bifi.es for further assistance, pointing out the programs to install or update with the version needed.

Usage

Example script : test-filogenics.sh

#!/bin/bash

#SBATCH -J test-filogenics

#SBATCH --output=test-filogenics-%j.out

#SBATCH --error=test-filogenics-%j.err

#SBATCH -p agustina_thin

#SBATCH -N 1

export CRYPTOGRAPHY_OPENSSL_NO_LEGACY=1

module load filogenics/2024

# FastQC

fastqc --help

# fastp

fastp --help

# AdapterRemoval

AdapterRemoval --help

# bwa

bwa

# Samtools

samtools --help

# mapDamage

mapDamage --help

# RAxML

raxmlHPC -h

# IQTREE2

iqtree2 --help

# MrBayes

mb -h

# Beast2

beast -version

Submit with :

sbatch --account=your_project_ID test-filogenics.sh

More info :

FHI-aims

FHI-aims is an all-electron electronic structure code based on numeric atom-centered orbitals. It enables first-principles simulations with very high numerical accuracy for production calculations, with excellent scalability up to very large system sizes (thousands of atoms) and up to very large, massively parallel supercomputers (ten thousand CPU cores).

Usage

Example script : testFHI.sh

#!/bin/bash

# Request 3 nodes with 2 MPI tasks per node

#SBATCH --job-name=FHI-aims

#SBATCH --nodes=3

#SBATCH --ntasks-per-node=2

#SBATCH --cpus-per-task=1

#SBATCH --mem-per-cpu=64GB

#SBATCH -p agustina_thin

ulimit -s unlimited

module purge

module load gnu12/12.2.0

module load openmpi4/4.1.4

module load hwloc/2.7.0

module load fhi-aims/240507

mpirun aims.240507.scalapack.mpi.x

In addition to the script for launching the task, it is necessary to provide the input data in the files control.in and geometry.in
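Since a job without these files only fails after it has waited in the queue, a quick check before submitting can save time. This is a minimal sketch; the function name `check_aims_inputs` is an illustrative assumption.

```shell
# Check that the FHI-aims input files exist in the run directory.
# Returns 0 when both files are present and 1 otherwise.
check_aims_inputs() {
    run_dir="${1:-.}"
    missing=0
    for f in control.in geometry.in; do
        if [ ! -f "$run_dir/$f" ]; then
            echo "missing input file: $run_dir/$f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example: only submit when both files are present
#   check_aims_inputs . && sbatch --account=your_project_ID testFHI.sh
```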

Submit with :

sbatch --account=your_project_ID testFHI.sh

More info :

Gaussian

Gaussian 16 is the latest in the Gaussian series of programs. It provides state-of-the-art capabilities for electronic structure modeling. Gaussian 16 is licensed for a wide variety of computer systems. All versions of Gaussian 16 contain every scientific/modeling feature, and none imposes any artificial limitations on calculations other than your computing resources and patience.

Usage

Example script : testGaussian.sh

#!/bin/bash

#SBATCH -e TSHX2_boro_NBOs%j.err

#SBATCH -o TSHX2_boro_NBOs%j.msg

#SBATCH -p agustina_thin

#SBATCH -N 4

#SBATCH --ntasks-per-node=24

module load gaussian/g16

g16 < TSHX2_boro_NBOs.gjf

Submit with :

sbatch --account=your_project_ID testGaussian.sh

Gaussian 09 is also available for legacy purposes. Load its module with:

module load gaussian/g09

Usage:

Example script : testGaussian.sh

#!/bin/bash

#SBATCH -e TSHX2_boro_NBOs%j.err

#SBATCH -o TSHX2_boro_NBOs%j.msg

#SBATCH -p agustina_thin

#SBATCH -N 4

#SBATCH --ntasks-per-node=24

module load gaussian/g09

g09 < TSHX2_boro_NBOs.gjf

Submit with :

sbatch --account=your_project_ID testGaussian.sh

More info :

Gimic

This is the GIMIC program for calculating magnetically induced currents in molecules. For this program to produce any useful information, you need to provide it with an AO density matrix and three (effective) magnetically perturbed AO density matrices in the proper format. Currently only recent versions of ACES2 (CFOUR), Turbomole, QChem, LSDalton, FERMION++ and Gaussian can produce these matrices. Dalton is in the works. If you would like to add your favourite program to the list, please use the source, Luke.

Usage

Example script : testGimic.sh

#!/bin/bash

#SBATCH -J testGimic # job name

#SBATCH -N 1 # total number of nodes

#SBATCH --cpus-per-task=2

#SBATCH --output=testGimic-%j.out #output file (%j expands to jobID)

#SBATCH --error=testGimic-%j.err #error file (%j expands to jobID)

#SBATCH --mem-per-cpu=16G

#SBATCH -p agustina_thin # partition

module load gimic/2.2.1

gimic > gimic.out

Launch the job from the gimic-master/examples/benzene/3D directory.

More info :

- https://gimic.readthedocs.io/en/latest/index.html

- https://codeload.github.com/qmcurrents/gimic/zip/refs/heads/master

Golang 1.9

Build simple, secure, scalable systems with Go.

- An open-source programming language supported by Google

- Easy to learn and great for teams

- Built-in concurrency and a robust standard library

- Large ecosystem of partners, communities, and tools

Usage

Example script : testGolang.sh

#!/bin/bash

#SBATCH -e golang_test%j.err

#SBATCH -o golang_test%j.msg

#SBATCH -p agustina_thin

#SBATCH -N 4

#SBATCH --ntasks-per-node=24

module load golang/1.9

go help

go version

Submit with :

sbatch --account=your_project_ID testGolang.sh

More info :

Gromacs

Gromacs is a free and open-source software suite for high-performance molecular dynamics and output analysis. There are two versions of Gromacs 2024.5: the CPU version and the GPU version.

Usage CPU version

Example script : test-gromacs-cpu.sh

#!/bin/sh

#SBATCH --job-name=test-gromacs-cpu

#SBATCH --output=test-gromacs-cpu-%j.out

#SBATCH --error=test-gromacs-cpu-%j.err

#SBATCH -p agustina_thin

#SBATCH -N 1

#SBATCH --ntasks-per-node=16

# load CPU version

module load gromacs/gromacs-2024.5_cpu

source $GROMACS_BIN/GMXRC

export OMP_NUM_THREADS=16

mpirun $GROMACS_BIN/./gmx_mpi --help

echo "done!"

Submit with :

sbatch --account=your_project_ID test-gromacs-cpu.sh
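The example sets `OMP_NUM_THREADS` to a fixed value. One possible alternative is to derive it from the Slurm allocation so that the thread count follows the resources actually requested. The sketch below assumes the job requests `--cpus-per-task`, which the example script above does not do; the function name is illustrative.

```shell
# Derive the OpenMP thread count from the Slurm allocation.
# SLURM_CPUS_PER_TASK is only set when the job requests --cpus-per-task,
# so fall back to 1 thread otherwise.
omp_threads_from_slurm() {
    echo "${SLURM_CPUS_PER_TASK:-1}"
}

OMP_NUM_THREADS="$(omp_threads_from_slurm)"
export OMP_NUM_THREADS
echo "Using $OMP_NUM_THREADS OpenMP threads per MPI rank"
```

With this approach, the number of MPI ranks times the number of threads per rank stays within the cores that Slurm assigned to the job.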

Usage GPU version

Example script : test-gromacs-gpu.sh

#!/bin/sh

#SBATCH --job-name=test-gromacs-gpu

#SBATCH --output=test-gromacs-gpu-%j.out

#SBATCH --error=test-gromacs-gpu-%j.err

#SBATCH -p rasmia_ada

#SBATCH -N 1

#SBATCH --ntasks-per-node=16

#SBATCH --gres=gpu:4

# load gromacs with GPU support

module load gromacs/gromacs-2024.5_gpu

source $GROMACS_BIN/GMXRC

nvidia-smi -L

export CUDA_VISIBLE_DEVICES=0,1,2,3

export OMP_NUM_THREADS=16

export GMX_ENABLE_DIRECT_GPU_COMM=1

export GMX_FORCE_UPDATE_DEFAULT_GPU=true

mpirun $GROMACS_BIN/./gmx_mpi --help

echo "done!"

Submit with :

sbatch --account=your_project_ID test-gromacs-gpu.sh

Gromacs 2020.5

Example script : test-gromacs-cpu.sh

#!/bin/sh

#SBATCH --job-name=test-gromacs-cpu

#SBATCH --output=test-gromacs-cpu-%j.out

#SBATCH --error=test-gromacs-cpu-%j.err

#SBATCH -p agustina_thin

#SBATCH -N 1

#SBATCH --ntasks-per-node=16

# load CPU version

module load gromacs/gromacs-2020.5_cpu

export OMP_NUM_THREADS=16

source $GROMACS_BIN/GMXRC

#source $GROMACS_SINGLE_BIN/GMXRC # or source $GROMACS_BIN/GMXRC

#source $GROMACS_DOUBLE_BIN/GMXRC

# also available for single and double precision

# $GROMACS_SINGLE_BIN/./gmx_mpi --help

# $GROMACS_DOUBLE_BIN/./gmx_mpi_d --help

mpirun $GROMACS_BIN/./gmx_mpi --help

echo "done!"

Submit with :

sbatch --account=your_project_ID test-gromacs-cpu.sh

Example script : test-gromacs-gpu.sh

#!/bin/sh

#SBATCH --job-name=test-gromacs-gpu

#SBATCH --output=test-gromacs-gpu-%j.out

#SBATCH --error=test-gromacs-gpu-%j.err

#SBATCH -p rasmia_ada

#SBATCH -N 1

#SBATCH --ntasks-per-node=16

#SBATCH --gres=gpu:4

# load gromacs with GPU support

module load gromacs/gromacs-2020.5_gpu

source $GROMACS_BIN/GMXRC

nvidia-smi -L

export CUDA_VISIBLE_DEVICES=0,1,2,3

export OMP_NUM_THREADS=16

export GMX_ENABLE_DIRECT_GPU_COMM=1

export GMX_FORCE_UPDATE_DEFAULT_GPU=true

mpirun $GROMACS_BIN/./gmx_mpi --help

echo "done!"

Submit with :

sbatch --account=your_project_ID test-gromacs-gpu.sh

More info :

Hisat 2.2.1

Hisat2 is a fast and sensitive alignment program for mapping next-generation sequencing reads (both DNA and RNA) to a population of human genomes as well as to a single reference genome. Based on an extension of BWT for graphs (Sirén et al. 2014), we designed and implemented a graph FM index (GFM), an original approach and its first implementation. In addition to using one global GFM index that represents a population of human genomes, HISAT2 uses a large set of small GFM indexes that collectively cover the whole genome. These small indexes (called local indexes), combined with several alignment strategies, enable rapid and accurate alignment of sequencing reads. This new indexing scheme is called a Hierarchical Graph FM index (HGFM).

Usage

Example script : test-hisat.sh

#!/bin/bash

#SBATCH -J hisat-test

#SBATCH -e hisat-test%j.err

#SBATCH -o hisat-test%j.msg

#SBATCH -p agustina_thin # queue (partition)

# load hisat

module load hisat/2.2.1

# list hisat root folder

ls -al $HISAT_ROOT

hisat2 --help

echo "DONE!"

Submit with :

sbatch --account=your_project_ID test-hisat.sh

More info :

Julia

The Julia Programming Language.

Usage

Example script : testJulia.sh

#!/bin/bash

#SBATCH -e julia_test%j.err

#SBATCH -o julia_test%j.msg

#SBATCH -p agustina_thin

#SBATCH -N 4

#SBATCH --ntasks-per-node=24

module load julia/1.10.3

julia --help

julia --version

Submit with :

sbatch --account=your_project_ID testJulia.sh

More info :

llama.cpp

llama.cpp is an open source software library that performs inference on various large language models, such as Meta's LLaMA, in pure C/C++. It is co-developed alongside the GGML project, a general-purpose tensor library. Command-line tools are included with the library.

Usage

llama.cpp has been compiled with GPU support and is available on the ADA and Hopper GPU queues.

Available llama.cpp versions:

- llama.cpp v1 (b4234)

- llama.cpp v1 (b4706)

Example script : test-llamacpp-hpc.sh

#!/bin/bash

#SBATCH -J llamacpp-gpu-test

#SBATCH -e llamacpp-test%j.err

#SBATCH -o llamacpp-test%j.msg

#SBATCH -p rasmia_ada # queue (partition)

#SBATCH --nodelist=agpul08

#SBATCH --gres=gpu:1

#module load llama.cpp/b4234

module load llama.cpp/b4706

BASE_PATH=/fs/agustina/$(whoami)/llamacpp

MODELS_PATH=$BASE_PATH/models

mkdir -p $MODELS_PATH

if [ -f "$MODELS_PATH/tiny-vicuna-1b.q5_k_m.gguf" ]; then

echo "Model tiny-vicuna-1b.q5_k_m.gguf exists"

else

echo "Model tiny-vicuna-1b.q5_k_m.gguf does not exist, downloading now..."

# model download

wget https://huggingface.co/afrideva/Tiny-Vicuna-1B-GGUF/resolve/main/tiny-vicuna-1b.q5_k_m.gguf -P $MODELS_PATH

fi

mkdir -p $BASE_PATH/answers

PROMPT='I think the meaning of life is'

# use llama.cpp client

$LLAMACPP_BIN/./llama-cli --help

$LLAMACPP_BIN/./llama-cli --version

$LLAMACPP_BIN/./llama-cli --list-devices

$LLAMACPP_BIN/./llama-cli -m $MODELS_PATH/tiny-vicuna-1b.q5_k_m.gguf \

-p "$PROMPT" \

--n-predict 128 \

--n-gpu-layers -1 \

-dev CUDA0 > $BASE_PATH/answers/llama-cli-answer.txt

cat $BASE_PATH/answers/llama-cli-answer.txt

# list llama.cpp directory to find out more tools

ls -al $LLAMACPP_BIN

echo "DONE!"

Submit with :

sbatch --account=your_project_ID test-llamacpp-hpc.sh

More info :

llama.cpp Bindings

The llama.cpp bindings (llama-cpp-python) are simple Python bindings for the llama.cpp library.

Usage

Example script : test-llamacpp-bindings.sh

#!/bin/bash

#SBATCH -J test-llamacpp-bindings

#SBATCH -e test-llamacpp-bindings-%j.err

#SBATCH -o test-llamacpp-bindings-%j.msg

#SBATCH -p rasmia_hopper # queue (partition)

#SBATCH --nodelist=agpuh03 # select one node

#SBATCH --gres=gpu:1 # select one GPU

module load llama-cpp-python/0.3.1

export SSL_CERT_FILE=/etc/ssl/certs/ca-bundle.trust.crt

pip install typing-extensions diskcache certifi

# check available GPUs

nvidia-smi

python test-llamacpp-bindings.py

echo "DONE!"

Submit with :

sbatch --account=your_project_ID test-llamacpp-bindings.sh

Example file : test-llamacpp-bindings.py

import os
import urllib.request

from llama_cpp import Llama


def download_file(file_link, filename):
    # Checks if the file already exists before downloading
    if not os.path.isfile(filename):
        urllib.request.urlretrieve(file_link, filename)
        print("File downloaded successfully.")
    else:
        print("File already exists.")


# Downloading GGUF model from HuggingFace
ggml_model_path = "https://huggingface.co/TheBloke/zephyr-7B-beta-GGUF/resolve/main/zephyr-7b-beta.Q4_0.gguf"
filename = "zephyr-7b-beta.Q4_0.gguf"

download_file(ggml_model_path, filename)

# n_gpu_layers=-1 offloads all model layers to the GPU
llm = Llama(model_path="zephyr-7b-beta.Q4_0.gguf", n_ctx=512, n_batch=126, n_gpu_layers=-1)


def generate_text(
    prompt="Who is the CEO of Apple?",
    max_tokens=256,
    temperature=0.1,
    top_p=0.5,
    echo=False,
    stop=["#"],
):
    output = llm(
        prompt,
        max_tokens=max_tokens,
        temperature=temperature,
        top_p=top_p,
        echo=echo,
        stop=stop,
    )
    output_text = output["choices"][0]["text"].strip()
    return output_text


def generate_prompt_from_template(input):
    chat_prompt_template = f"""<|im_start|>system
You are a helpful chatbot.<|im_end|>
<|im_start|>user
{input}<|im_end|>"""
    return chat_prompt_template


prompt = generate_prompt_from_template(
    "Compose an engaging travel blog post about a recent trip to Hawaii, highlighting cultural experiences and must-see attractions."
)

print(generate_text(
    prompt,
    max_tokens=356,
))
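The download helper in test-llamacpp-bindings.py skips the download whenever the target file exists, so a download interrupted by a job time limit could leave a truncated model that is later treated as complete. A minimal sketch of one way to avoid this, assuming the model is written to a temporary file first and renamed only when the transfer finishes (the function name `download_file_atomic` and the `.part` suffix are hypothetical, not part of the original example):

```python
import os
import shutil
import urllib.request


def download_file_atomic(file_link, filename):
    """Download file_link to filename; return True if a download happened."""
    if os.path.isfile(filename):
        return False  # a complete file is already in place

    partial = filename + ".part"  # hypothetical suffix for the temporary file
    with urllib.request.urlopen(file_link) as response, open(partial, "wb") as out:
        shutil.copyfileobj(response, out)

    # Rename only after the whole body was written; os.replace is atomic
    # when source and destination are on the same filesystem.
    os.replace(partial, filename)
    return True
```

If the job is killed mid-transfer, only the `.part` file remains and the next run downloads the model again.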

More info :

Miniconda3

Miniconda is a free, miniature installation of Anaconda Distribution that includes only conda, Python, the packages they both depend on, and a small number of other useful packages.

WARNING: If you do not know how to create your own Miniconda environment on the Agustina cluster, please read the instructions below. If you need further assistance, send an email with your request to agustina@bifi.es.

Miniconda is useful to create your own conda environment in the /fs/agustina/USER folder. To do this, you can import your own yml file and create the environment with the following commands:

Usage

module load miniconda3/3.12

export CONDA_PKGS_DIRS=/fs/agustina/$(whoami)/.conda-pkgs

mkdir -p /fs/agustina/$(whoami)/conda-env

$MINICONDA3_HOME/bin/conda-env create --prefix /fs/agustina/$(whoami)/conda-env/my-conda-env --file /fs/agustina/$(whoami)/conda-env/my-environment-file.yml

source $MINICONDA3_HOME/bin/activate /fs/agustina/$(whoami)/conda-env/my-conda-env

Once the environment is ready and activated, you can install more packages with pip install inside it. Then you can create a bash script to launch the environment on the Agustina cluster:

Example script : test-miniconda.sh

#!/bin/bash

#SBATCH -J test-miniconda

#SBATCH -o test-miniconda-%j.msg

#SBATCH -e test-miniconda-%j.err

#SBATCH -p agustina_thin

#SBATCH -N 1

module load miniconda3/3.12

# activate environment

source $MINICONDA3_HOME/bin/activate /fs/agustina/$(whoami)/conda-env/my-conda-env

python --version

echo "done!!"

Submit with :

sbatch --account=your_project_ID test-miniconda.sh
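To confirm from inside a job that the interpreter really comes from the activated environment, a short Python check can be run after the activation line. This is only a sketch: the function name `interpreter_report` is hypothetical, and it assumes that the Python of an activated conda environment reports the environment folder as `sys.prefix`.

```python
import os
import sys


def interpreter_report(expected_prefix):
    """Return the Python version, the active prefix and whether it matches."""
    return {
        "version": "{}.{}.{}".format(*sys.version_info[:3]),
        "prefix": sys.prefix,
        # normpath removes trailing slashes so both paths compare cleanly
        "matches": os.path.normpath(sys.prefix) == os.path.normpath(expected_prefix),
    }
```

For example, `print(interpreter_report("/fs/agustina/john.diaz/conda-env/my-conda-env"))` would show `"matches": True` only when the script runs with the Python of that environment.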

Base file: my-environment-file.yml

name:

channels:

- defaults

dependencies:

- _libgcc_mutex=0.1=main

- _openmp_mutex=5.1=1_gnu

- blas=1.0=mkl

- bzip2=1.0.8=h5eee18b_6

- ca-certificates=2024.9.24=h06a4308_0

- expat=2.6.3=h6a678d5_0

- intel-openmp=2023.1.0=hdb19cb5_46306

- ld_impl_linux-64=2.40=h12ee557_0

- libffi=3.4.4=h6a678d5_1

- libgcc-ng=11.2.0=h1234567_1

- libgomp=11.2.0=h1234567_1

- libmpdec=4.0.0=h5eee18b_0

- libstdcxx-ng=11.2.0=h1234567_1

- libuuid=1.41.5=h5eee18b_0

- mkl=2023.1.0=h213fc3f_46344

- mkl-service=2.4.0=py313h5eee18b_1

- mkl_fft=1.3.11=py313h5eee18b_0

- mkl_random=1.2.8=py313h06d7b56_0

- ncurses=6.4=h6a678d5_0

- numpy=2.1.3=py313hf4aebb8_0

- numpy-base=2.1.3=py313h3fc9231_0

- openssl=3.0.15=h5eee18b_0

- pip=24.2=py313h06a4308_0

- python=3.13.0=hf623796_100_cp313

- python_abi=3.13=0_cp313

- readline=8.2=h5eee18b_0

- setuptools=72.1.0=py313h06a4308_0

- sqlite=3.45.3=h5eee18b_0

- tbb=2021.8.0=hdb19cb5_0

- tk=8.6.14=h39e8969_0

- tzdata=2024b=h04d1e81_0

- xz=5.4.6=h5eee18b_1

- zlib=1.2.13=h5eee18b_1

- pip:

- alabaster==1.0.0

- babel==2.16.0

- certifi==2024.8.30

- charset-normalizer==3.4.0

- contourpy==1.3.1

- cycler==0.12.1

- docutils==0.21.2

- fonttools==4.55.0

- idna==3.10

- imagesize==1.4.1

- jinja2==3.1.4

- kiwisolver==1.4.7

- markupsafe==3.0.2

- matplotlib==3.9.2

- packaging==24.2

- pillow==11.0.0

- pygments==2.18.0

- pyparsing==3.2.0

- python-dateutil==2.9.0.post0

- requests==2.32.3

- scipy==1.14.1

- six==1.16.0

- snowballstemmer==2.2.0

- sphinx==8.1.3

- sphinxcontrib-applehelp==2.0.0

- sphinxcontrib-devhelp==2.0.0

- sphinxcontrib-htmlhelp==2.1.0

- sphinxcontrib-jsmath==1.0.1

- sphinxcontrib-qthelp==2.0.0

- sphinxcontrib-serializinghtml==2.0.0

- urllib3==2.2.3

- wheel==0.38.1

More info :

MUMAX3-cQED 1.0

Mumax3-cQED: like Mumax3 but for a magnet coupled to a cavity. This is a fork of the open source micromagnetic simulation software mumax3. Mumax3-cQED enhances mumax3 by including the effect of coupling the magnet to an electromagnetic cavity.

IMPORTANT: If you make use of GPU queues, it is mandatory to select a minimum number of CPU cores given by 16 cores/GPU multiplied by the amount of nodes selected and the number of selected GPUs (16 cores/gpu * num. GPUs * num. nodes).
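The rule above is simple arithmetic, and the value it produces is the one used for `--cpus-per-task` in the GPU example scripts of this document. A small sketch, where the function name `min_cpu_cores` is hypothetical and the number of GPUs is understood as GPUs per node (the `--gres=gpu:N` value):

```python
CORES_PER_GPU = 16  # value stated in the rule above


def min_cpu_cores(gpus_per_node, nodes=1, cores_per_gpu=CORES_PER_GPU):
    """Minimum CPU cores to request: cores/GPU * GPUs per node * nodes."""
    if gpus_per_node < 1 or nodes < 1:
        raise ValueError("gpus_per_node and nodes must be positive")
    return cores_per_gpu * gpus_per_node * nodes


print(min_cpu_cores(1, 1))  # 1 GPU on 1 node
print(min_cpu_cores(4, 2))  # 4 GPUs per node on 2 nodes
```

With these inputs the function gives 16 and 128, matching the `--cpus-per-task` values shown in the single-GPU and two-node examples.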

Usage

Example script : test-mumax.sh

#!/bin/bash

#SBATCH -o mumax3-cqed-infos%j.o

#SBATCH -p rasmia_hopper

#SBATCH -N 1

#SBATCH --gres=gpu:1 # launch on 1 GPU

#SBATCH --cpus-per-task=16 # 16 cores per GPU * 1 GPU * 1 node

module load mumax3-cqed/1.0

echo "GCC version: $(gcc --version)"

echo "GO version: $(go version)"

mumax3 test-script.mx3

echo "done!!"

The informative report will be shown in the output file provided by the job.

Submit with :

sbatch --account=your_project_ID test-mumax.sh

More info :

Namd

NAMD, recipient of a 2002 Gordon Bell Award, a 2012 Sidney Fernbach Award, and a 2020 Gordon Bell Prize, is a parallel molecular dynamics code designed for high-performance simulation of large biomolecular systems. Based on Charm++ parallel objects, NAMD scales to hundreds of cores for typical simulations and beyond 500,000 cores for the largest simulations. NAMD uses the popular molecular graphics program VMD for simulation setup and trajectory analysis, but is also file-compatible with AMBER, CHARMM, and X-PLOR. NAMD is distributed free of charge with source code.

Usage

Example script : testNamd.sh

#!/bin/bash

#SBATCH --job-name=alanin

#SBATCH --output=%x.o%j

#SBATCH --error=%x.e%j

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=2

#SBATCH --mem=32G

#SBATCH --time=1-00:00:00

#SBATCH -p agustina_thin

#

module load namd/3.0b6

namd3 +p ${SLURM_TASKS_PER_NODE} alanin.conf > alanin.log

Example files can be found at : lib/replica/example/ in NAMD_3.0_Linux-x86_64-multicore.tar.gz

Submit with :

sbatch --account=your_project_ID testNamd.sh

More info :

- https://www.hpc.unipr.it/dokuwiki/doku.php?id=calcoloscientifico:userguide:namd

- https://www.ks.uiuc.edu/Research/namd/2.8b2/ug.pdf

NCCL Test

NCCL provides optimized primitives for inter-GPU communication. NCCL (pronounced "Nickel") is a stand-alone library of standard communication routines for GPUs, implementing all-reduce, all-gather, reduce, broadcast, reduce-scatter, as well as any send/receive based communication pattern. It has been optimized to achieve high bandwidth on platforms using PCIe, NVLink, NVswitch, as well as networking using InfiniBand Verbs or TCP/IP sockets. NCCL supports an arbitrary number of GPUs installed in a single node or across multiple nodes, and can be used in either single- or multi-process (e.g., MPI) applications.

These tests check both the performance and the correctness of NCCL operations.

Usage

Example script : test-nccl.sh

#!/bin/bash

#SBATCH -J nccl-tst # job name

#SBATCH -o nccl-test.o%j # output and error file name (%j expands to jobID)

#SBATCH -p rasmia_ada # queue L40S (partition)

#SBATCH -N 2 # total number of nodes

module load nccl-test/1.0

# Run 2 MPI processes in 2 GPUs in 2 Nodes

for i in $NCCLBUILD/*_perf; do

FILENAME=$(basename $i)

echo ""

echo "Running test $FILENAME..."

echo ""

mpirun -np 2 $NCCLBUILD/./$FILENAME -b 8 -e 8G -f 2 -g 2

done

echo "done!!"

The informative report will be shown in the job output file.

Submit with :

sbatch --account=your_project_ID test-nccl.sh
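In test-nccl.sh each benchmark is started with `-b 8 -e 8G -f 2`, which in nccl-tests sets the minimum message size, the maximum message size and the multiplication factor between consecutive sizes. The sketch below lists the sizes such a sweep visits; it assumes that the `G` suffix means 1024^3 bytes, and the function name `sweep_sizes` is hypothetical.

```python
def sweep_sizes(min_bytes, max_bytes, factor):
    """Message sizes visited when growing from min_bytes to max_bytes by factor."""
    if factor < 2:
        raise ValueError("factor must be at least 2")
    sizes = []
    size = min_bytes
    while size <= max_bytes:
        sizes.append(size)
        size *= factor
    return sizes


sizes = sweep_sizes(8, 8 * 1024**3, 2)
print(len(sizes), sizes[0], sizes[-1])
```

Under that assumption the sweep has 31 steps per test, from 8 bytes to 8 GiB, which helps to estimate how long the output of each `*_perf` binary will be.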

More info :

NBO/7.0

The Natural Bond Orbital (NBO) program NBO 7.0 is a discovery tool for chemical insights from complex wavefunctions. NBO 7.0 is the current version of the broad suite of 'natural' algorithms for optimally expressing numerical solutions of Schrödinger's wave equation in the chemically intuitive language of Lewis-like bonding patterns and associated resonance-type 'donor-acceptor' interactions.

Usage

Example script : testNBO7.sh

#!/bin/bash

#SBATCH -e nbo7_test%j.err

#SBATCH -o nbo7_test%j.msg

#SBATCH -p agustina_thin

#SBATCH -N 4

#SBATCH --ntasks-per-node=24

module load nbo/7

# launch with gaunbo7

Submit with :

sbatch --account=your_project_ID testNBO7.sh

More info :

NVIDIA HPC SDK

A Comprehensive Suite of Compilers, Libraries and Tools for HPC. The NVIDIA HPC Software Development Kit (SDK) includes the proven compilers, libraries and software tools essential to maximizing developer productivity and the performance and portability of HPC applications.

IMPORTANT: If you make use of GPU queues, it is mandatory to select a minimum number of CPU cores given by 16 cores/GPU multiplied by the amount of nodes selected and the number of selected GPUs (16 cores/gpu * num. GPUs * num. nodes).

Usage

Example script : testNvidiaHpcSdk.sh

#!/bin/bash

#SBATCH -e nvidiahpcsdk_test%j.err

#SBATCH -o nvidiahpcsdk_test%j.msg

#SBATCH -p agustina_thin

#SBATCH -N 4

#SBATCH --ntasks-per-node=24

module load nvidia-hpc-sdk/24.5

nvcc --help

nvcc --version

Submit with :

sbatch --account=your_project_ID testNvidiaHpcSdk.sh

Example script (Running in ada queue) : sumParallel.sh

#!/bin/bash

#SBATCH -J multi-gpu-test

#SBATCH -e multigpu-test%j.err

#SBATCH -o multigpu-test%j.msg

#SBATCH -p rasmia_ada # queue (partition)

#SBATCH --nodes=1

#SBATCH --gres=gpu:2 # launch on 2 GPUs

#SBATCH --cpus-per-task=32 # 16 cores per GPU * 2 GPUs * 1 node

module load nvidia-hpc-sdk/24.5

echo "Loaded NVIDIA SDK !!!"

nvidia-smi

mkdir -p /fs/agustina/$(whoami)/test-multigpu

export CUDA_SUM_CODE=/fs/agustina/$(whoami)/test-multigpu

nvcc $CUDA_SUM_CODE/sum-array-multigpu.cu -o $CUDA_SUM_CODE/sum-array-multigpu

$CUDA_SUM_CODE/./sum-array-multigpu

Example script (Running in hopper queue) : sumParallel.sh

#!/bin/bash

#SBATCH -J multi-gpu-test

#SBATCH -e multigpu-test%j.err

#SBATCH -o multigpu-test%j.msg

#SBATCH -p rasmia_hopper # queue (partition)

#SBATCH --nodelist=agpuh02

#SBATCH --gres=gpu:4 # launch on 4 GPUs

#SBATCH --cpus-per-task=64 # 16 cores per GPU * 4 GPUs * 1 node

module load nvidia-hpc-sdk/24.5

echo "Loaded NVIDIA SDK !!!"

nvidia-smi

export CUDA_SUM_CODE=/fs/agustina/$(whoami)/test-multigpu

nvcc $CUDA_SUM_CODE/sum-array-multigpu.cu -o $CUDA_SUM_CODE/sum-array-multigpu

$CUDA_SUM_CODE/./sum-array-multigpu

Execution in selected nodes: sumParallel.sh

#!/bin/env bash

#SBATCH -J multgpu-test # job name

#SBATCH -o multi-gpu.o%j # output and error file name (%j expands to jobID)

#SBATCH -p rasmia_hopper # H100 (partition)

#SBATCH --gres=gpu:4 # gpus per node

#SBATCH --nodelist=agpuh[02-03] # two nodes (node 2 and 3)

#SBATCH --ntasks=2 # one task per node

#SBATCH --cpus-per-task=128 # 16 cores per GPU * 4 GPUs * 2 nodes

module load nvidia-hpc-sdk/24.5

export CUDA_SUM_CODE=/fs/agustina/$(whoami)/test-multigpu

nvcc $CUDA_SUM_CODE/sum-array-multigpu.cu -o $CUDA_SUM_CODE/sum-array-multigpu

mpirun $CUDA_SUM_CODE/./sum-array-multigpu

Submit with :

sbatch --account=your_project_ID sumParallel.sh

CUDA file : sum-array-multigpu.cu

#include "cuda_runtime.h"

#include "device_launch_parameters.h"

#include <stdio.h>

// for random initialize

#include <stdlib.h>

#include <time.h>

// for memset

#include <cstring>

void printGpuInfo(int i) {

cudaDeviceProp prop;

cudaGetDeviceProperties(&prop, i);

printf("Device Number: %d\n", i);

printf(" Device name: %s\n", prop.name);

printf(" Memory Clock Rate (KHz): %d\n", prop.memoryClockRate);

printf(" Memory Bus Width (bits): %d\n", prop.memoryBusWidth);

printf(" Peak Memory Bandwidth (GB/s): %f\n\n", 2.0*prop.memoryClockRate*(prop.memoryBusWidth/8)/1.0e6);

}

void compare_arrays(int *a, int *b, int size) {

for (int i = 0; i < size; i++) {

if (a[i] != b[i]) {

printf("%d != %d\n", a[i], b[i]);

printf("Arrays are different!\n\n");

return;

}

}

printf("Arrays are the same!\n\n");

}

// CUDA Kernel

__global__ void sum_array_gpu(int *a, int *b, int *c, int size) {

int gid = blockIdx.x * blockDim.x + threadIdx.x;

if (gid < size) {

c[gid] = a[gid] + b[gid];

}

}

void sum_array_cpu(int *a, int *b, int *c, int size) {

for (int i = 0; i < size; i++) {

c[i] = a[i] + b[i];

}

}

int main() {

int size = 10000;

int block_size = 128;

int nDevices;

int NO_BYTES = size * sizeof(int);

// host pointers

int *h_a, *h_b, *gpu_results, *h_c;

h_a = (int *)malloc(NO_BYTES);

h_b = (int *)malloc(NO_BYTES);

h_c = (int *)malloc(NO_BYTES);

// initialize host pointer

time_t t;

srand((unsigned)time(&t));

for (int i = 0; i < size; i++) {

h_a[i] = (int)(rand() & 0xff);

}

for (int i = 0; i < size; i++) {

h_b[i] = (int)(rand() & 0xff);

}

sum_array_cpu(h_a, h_b, h_c, size);

cudaGetDeviceCount(&nDevices);

// device pointer

int *d_a, *d_b, *d_c;

for (int dev = 0; dev < nDevices; dev++) {

printGpuInfo(dev);

cudaSetDevice(dev);

gpu_results = (int *)malloc(NO_BYTES);

memset(gpu_results, 0 , NO_BYTES);

cudaMalloc((int **)&d_a, NO_BYTES);

cudaMalloc((int **)&d_b, NO_BYTES);

cudaMalloc((int **)&d_c, NO_BYTES);

cudaMemcpy(d_a, h_a, NO_BYTES, cudaMemcpyHostToDevice);

cudaMemcpy(d_b, h_b, NO_BYTES, cudaMemcpyHostToDevice);

// launching the grid

dim3 block(block_size);

dim3 grid((size/block.x) + 1);

sum_array_gpu<<<grid, block>>>(d_a, d_b, d_c, size);

cudaDeviceSynchronize();

cudaMemcpy(gpu_results, d_c, NO_BYTES, cudaMemcpyDeviceToHost);

// array comparison

compare_arrays(gpu_results, h_c, size);

cudaFree(d_a);

cudaFree(d_b);

cudaFree(d_c);

free(gpu_results);

}

free(h_a);

free(h_b);

free(h_c);

return 0;

}
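Two pieces of arithmetic in sum-array-multigpu.cu are easy to check by hand: the number of blocks in the launch grid and the peak memory bandwidth printed by printGpuInfo. The following Python sketch mirrors both expressions; the function names and the clock and bus width used in the example are hypothetical, not measured values of the L40S or H100 cards.

```python
def grid_blocks(size, block_size):
    """Blocks launched by the kernel: (size / block.x) + 1 with integer division."""
    return size // block_size + 1


def peak_bandwidth_gb_s(memory_clock_khz, bus_width_bits):
    """Same expression as printGpuInfo: 2 * clock * (bus width in bytes) / 1e6."""
    return 2.0 * memory_clock_khz * (bus_width_bits // 8) / 1.0e6


print(grid_blocks(10000, 128))              # values used in main()
print(peak_bandwidth_gb_s(1_000_000, 256))  # hypothetical 1 GHz clock, 256-bit bus
```

With size = 10000 and block_size = 128 the grid has 79 blocks, that is 10112 threads, so the `gid < size` test in the kernel is what keeps the extra 112 threads from writing past the end of the arrays. When the size is an exact multiple of the block size, the expression launches one spare block, which is harmless thanks to the same test.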

For a multi-GPU, multi-node launch it is mandatory to use OpenMPI. Here is the same bash script adapted to run on several nodes:

#!/bin/bash

#SBATCH -J multi-gpu-test

#SBATCH -e multigpu-test%j.err

#SBATCH -o multigpu-test%j.msg

#SBATCH -p rasmia_ada # queue L40S (partition)

#SBATCH --gres=gpu:4 # gpus per node

#SBATCH --nodes=4 # four nodes

#SBATCH --ntasks=4 # one task per node

#SBATCH --cpus-per-task=256 # 16 cores per GPU * 4 GPUs * 4 nodes

echo "This bash script launches the program on 16 GPUs"

module load nvidia-hpc-sdk/24.5

echo "Loaded NVIDIA SDK !!!"

nvidia-smi

export CUDA_SUM_CODE=/fs/agustina/$(whoami)/test-multigpu

nvcc $CUDA_SUM_CODE/sum-array-multigpu.cu -o $CUDA_SUM_CODE/sum-array-multigpu

mpirun $CUDA_SUM_CODE/./sum-array-multigpu

Submit with :

sbatch --account=your_project_ID sumParallel.sh

OLLAMA execution in Agustina

To use OLLAMA in Agustina you first need the OLLAMA binary file for Linux distributions.

Create the working directories, then download and uncompress the ollama-linux-amd64.tgz file:

$ mkdir -p /fs/agustina/$(whoami)/test-ollama/prompts # prompts folder

$ mkdir -p /fs/agustina/$(whoami)/test-ollama/models-ollama # new folder to download OLLAMA models

$ cd /fs/agustina/$(whoami)/test-ollama

$ wget https://github.com/ollama/ollama/releases/download/v0.3.14/ollama-linux-amd64.tgz

$ tar xvf ollama-linux-amd64.tgz

By default OLLAMA stores the models in the /home directory, specifically in $HOME/.ollama/models; we change this path by setting the OLLAMA_MODELS environment variable.
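The lookup described here can be sketched in a few lines of Python, which is handy to print from a job where the models will end up before starting a large download. The function name `ollama_models_dir` is hypothetical and the logic is only an illustration of the behaviour described in this section, not OLLAMA's own code.

```python
import os


def ollama_models_dir(environ=None):
    """Folder where models are expected: OLLAMA_MODELS if set, else ~/.ollama/models."""
    env = os.environ if environ is None else environ
    override = env.get("OLLAMA_MODELS")
    if override:
        return override
    return os.path.join(os.path.expanduser("~"), ".ollama", "models")


print(ollama_models_dir())
```

Since the home directory has a small quota, the printed path should point to /fs/agustina/id_usuario/... before any model is pulled.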

Here is an example of running OLLAMA models on the Agustina cluster using H100 GPUs:

bash file : test-ollama.sh

#!/bin/bash

#SBATCH -J ollama-gpu-test

#SBATCH -e ollama-test%j.err

#SBATCH -o ollama-test%j.msg

#SBATCH -p rasmia_hopper # H100 queue (partition)

#SBATCH --nodelist=agpuh02

#SBATCH --gres=gpu:4 # four GPUs

#SBATCH --cpus-per-task=64 # 16 cores per GPU * 4 GPUs * 1 node

module load nvidia-hpc-sdk/24.5

echo "Loaded NVIDIA SDK !!!"

module load python-math/3.11.4

python --version

nvidia-smi

echo "Current path: $(pwd)"

export BASE_OLLAMA_TEST=/fs/agustina/$(whoami)/test-ollama

export PROMPTS_PATH=$BASE_OLLAMA_TEST/prompts

export OLLAMA_BIN=$BASE_OLLAMA_TEST/bin

echo "OLLAMA PATH: $OLLAMA_BIN"

export OLLAMA_NUM_PARALLEL=4

export OLLAMA_LOAD_TIMEOUT=900

# change the models download path with this environment variable

export OLLAMA_MODELS=$BASE_OLLAMA_TEST/models-ollama

$OLLAMA_BIN/./ollama serve &

$OLLAMA_BIN/./ollama list

for i in llama3.1:8b-instruct-q2_K llama3.1:8b-instruct-q8_0; do

touch answer1-$i.txt && >answer1-$i.txt

echo "" >> answer1-$i.txt

echo "PROMPT:" >> answer1-$i.txt

echo "" >> answer1-$i.txt

cat $PROMPTS_PATH/prompt1.txt >> answer1-$i.txt

echo "" >> answer1-$i.txt

echo "ANSWER:" >> answer1-$i.txt

echo "" >> answer1-$i.txt

echo $(< $PROMPTS_PATH/prompt1.txt) | $OLLAMA_BIN/./ollama run $i >> answer1-$i.txt

echo "" >> answer1-$i.txt

echo "--------------------------------" >> answer1-$i.txt

echo "" >> answer1-$i.txt

echo "PROMPT:" >> answer1-$i.txt

echo "" >> answer1-$i.txt

cat $PROMPTS_PATH/prompt2.txt >> answer1-$i.txt

echo "" >> answer1-$i.txt

echo "ANSWER:" >> answer1-$i.txt

echo "" >> answer1-$i.txt

echo $(< $PROMPTS_PATH/prompt2.txt) | $OLLAMA_BIN/./ollama run $i >> answer1-$i.txt

done

$OLLAMA_BIN/./ollama list

echo "DONE!"

The script takes the input prompts from files $PROMPTS_PATH/prompt1.txt and $PROMPTS_PATH/prompt2.txt.

Submit with :

sbatch --account=your_project_ID test-ollama.sh
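Besides piping prompts into `ollama run`, the server started with `ollama serve` can be queried over its HTTP API from Python. The sketch below assumes the server listens on its default address (127.0.0.1, port 11434) and uses the `/api/generate` endpoint with streaming disabled; the helper names `build_generate_request` and `generate` are hypothetical.

```python
import json
import urllib.request

DEFAULT_HOST = "http://127.0.0.1:11434"  # assumed default address of "ollama serve"


def build_generate_request(model, prompt, host=DEFAULT_HOST):
    """Build the POST request for /api/generate with a single JSON answer."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        host + "/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model, prompt, host=DEFAULT_HOST, timeout=900):
    """Send the prompt to a running server and return the generated text."""
    request = build_generate_request(model, prompt, host)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))["response"]
```

Inside the job, a call such as `generate("llama3.1:8b-instruct-q8_0", open("prompt1.txt").read())` would return the answer as a string, provided the model has already been downloaded and the server is up.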

More info :

Ollama

Get up and running with large language models. Ollama is a client to use AI models. It is also possible to use DeepSeek models.

Usage

This example uses several ollama models, including a DeepSeek model. The script creates two prompts in Spanish, downloads the models, passes the prompts to each model, and saves the answers in separate files.

Available ollama versions:

- ollama 0.3.14

- ollama 0.5.7

Example script : test-ollama-hpc.sh

#!/bin/bash

#SBATCH -J ollama-gpu-test

#SBATCH -e ollama-test%j.err

#SBATCH -o ollama-test%j.msg

#SBATCH -p rasmia_hopper # queue (partition)

#SBATCH --nodelist=agpuh02

#SBATCH --gres=gpu:4

# load ollama

#module load ollama/0.3.14

module load ollama/0.5.7

python --version

nvidia-smi

echo "Current path: $(pwd)"

# export variables

export BASE_OLLAMA_TEST=/fs/agustina/$(whoami)/test-ollama

export PROMPTS_PATH=$BASE_OLLAMA_TEST/prompts

export OLLAMA_BIN=$OLLAMA_ROOT/bin

echo "OLLAMA PATH: $OLLAMA_BIN"

export OLLAMA_NUM_PARALLEL=4

export OLLAMA_LOAD_TIMEOUT=900

export OLLAMA_MODELS=$BASE_OLLAMA_TEST/models-ollama

# create folders for models and prompts

mkdir -p $PROMPTS_PATH

mkdir -p $OLLAMA_MODELS

# prompts creation

echo "Dime a que instituto de UNIZAR corresponden las siglas BiFi. Describe su investigacion reciente." > $PROMPTS_PATH/prompt1.txt

echo "cual es el camino mas interesante de sevilla a barcelona pasando por madrid?" > $PROMPTS_PATH/prompt2.txt

# start ollama

$OLLAMA_BIN/./ollama serve &

$OLLAMA_BIN/./ollama list

# 1 - Download models (also DeepSeek)

# 2 - Pass the prompts to the models

# 3 - Get the answers and save them to a file

for i in llama3.1:8b-instruct-q2_K \

llama3.1:8b-instruct-q8_0 \

deepseek-r1:7b; do

touch answer1-$i.txt && >answer1-$i.txt

echo "" >> answer1-$i.txt

echo "PROMPT:" >> answer1-$i.txt

echo "" >> answer1-$i.txt

cat $PROMPTS_PATH/prompt1.txt >> answer1-$i.txt

echo "" >> answer1-$i.txt

echo "ANSWER:" >> answer1-$i.txt

echo "" >> answer1-$i.txt

echo $(< $PROMPTS_PATH/prompt1.txt) | $OLLAMA_BIN/./ollama run $i >> answer1-$i.txt

echo "" >> answer1-$i.txt

echo "--------------------------------" >> answer1-$i.txt

echo "" >> answer1-$i.txt

echo "PROMPT:" >> answer1-$i.txt

echo "" >> answer1-$i.txt

cat $PROMPTS_PATH/prompt2.txt >> answer1-$i.txt

echo "" >> answer1-$i.txt

echo "ANSWER:" >> answer1-$i.txt

echo "" >> answer1-$i.txt

echo $(< $PROMPTS_PATH/prompt2.txt) | $OLLAMA_BIN/./ollama run $i >> answer1-$i.txt

done

$OLLAMA_BIN/./ollama list

echo "DONE!"

Submit with :

sbatch --account=your_project_ID test-ollama-hpc.sh

It is also possible to use other DeepSeek models, such as deepseek-coder-v2:16b, which is oriented to generating programming code. For example, we can request a Brainfuck interpreter written in Python:

Example script : test-deepseek-hpc.sh

#!/bin/bash

#SBATCH -J deepseek-gpu-test

#SBATCH -e deepseek-test%j.err

#SBATCH -o deepseek-test%j.msg

#SBATCH -p rasmia_hopper # queue (partition)

#SBATCH --nodelist=agpuh02

#SBATCH --gres=gpu:1

# load ollama

module load ollama/0.5.7

export BASE_OLLAMA_TEST=/fs/agustina/$(whoami)/deepseek-test

export ANSWERS_PATH=$BASE_OLLAMA_TEST/answers

export OLLAMA_BIN=$OLLAMA_ROOT/bin

echo "OLLAMA PATH: $OLLAMA_BIN"

export OLLAMA_NUM_PARALLEL=1

export OLLAMA_LOAD_TIMEOUT=900

export OLLAMA_MODELS=$BASE_OLLAMA_TEST/models

mkdir -p $OLLAMA_MODELS

mkdir -p $ANSWERS_PATH

$OLLAMA_BIN/./ollama serve &

PROMPT="create a brainfuck interpreter made in python"

touch $ANSWERS_PATH/answer.txt

truncate -s 0 $ANSWERS_PATH/answer.txt

echo $PROMPT | $OLLAMA_BIN/./ollama run deepseek-coder-v2:16b >> $ANSWERS_PATH/answer.txt

Submit with :

sbatch --account=your_project_ID test-deepseek-hpc.sh

deepseek-coder-v2:16b response:

Creating a Brainfuck interpreter in Python is an interesting exercise. Below, I'll provide a simple implementation of a Brainfuck interpreter

that can execute Brainfuck commands. This interpreter will handle the basic set of commands defined by Brainfuck, including moving pointers,

modifying values at the pointer location, and looping based on the value at the current cell.

Here's a basic implementation:

def brain_fuck(code):
    tape = [0] * 30000  # Initialize a tape of 30,000 cells with all values set to 0
    pointer = 0  # The current cell of the tape being pointed by the interpreter
    code_pointer = 0  # Position in the Brainfuck code string
    brackets_stack = []  # Stack for keeping track of bracket positions

    while code_pointer < len(code):
        command = code[code_pointer]

        if command == '>':
            pointer += 1
            if pointer >= len(tape):
                raise IndexError("Tape pointer out of bounds.")
        elif command == '<':
            pointer -= 1
            if pointer < 0:
                raise IndexError("Tape pointer out of bounds.")
        elif command == '+':
            tape[pointer] += 1
            if tape[pointer] > 255:
                tape[pointer] = 0
        elif command == '-':
            tape[pointer] -= 1
            if tape[pointer] < 0:
                tape[pointer] = 255
        elif command == '.':
            print(chr(tape[pointer]), end='')
        elif command == ',':
            tape[pointer] = ord(input()[0]) if input() else 0
        elif command == '[':
            if tape[pointer] == 0:
                bracket_nesting = 1
                while bracket_nesting > 0:
                    code_pointer += 1
                    if code[code_pointer] == '[':
                        bracket_nesting += 1
                    elif code[code_pointer] == ']':
                        bracket_nesting -= 1
            else:
                brackets_stack.append(code_pointer)
        elif command == ']':
            if tape[pointer] != 0:
                code_pointer = brackets_stack[-1]
            else:
                brackets_stack.pop()

        code_pointer += 1

# Example usage:
brain_fuck("++++++++++[>+>+++>+++++++>++++++++++<<<<-]>>>++.>+.+++++++..+++.<<++.>+++++++++++++++.>.+++.------.--------.")

This implementation covers the basic commands of Brainfuck and handles input/output as specified in the standard Brainfuck language.

It uses a simple stack to handle loops, jumping over the code when the condition is not met. The tape size is fixed at 30,000 cells,

which can be adjusted based on requirements.

Keep in mind that this implementation does not include error handling for malformed Brainfuck code or

runtime errors (like accessing out of bounds memory). You might want to add checks and exceptions to make the interpreter more

robust and user-friendly.

More info :

OpenBabel

OpenBabel is a project to facilitate the interconversion of chemical data from one format to another – including file formats of various types. This is important for the following reasons:

- Multiple programs are often required in realistic workflows. These may include databases, modeling or computational programs, visualization programs, etc.

- Many programs have individual data formats, and/or support only a small subset of other file types.

- Chemical representations often vary considerably:

- Some programs are 2D. Some are 3D. Some use fractional k-space coordinates.

- Some programs use bonds and atoms of discrete types. Others use only atoms and electrons.

- Some programs use symmetric representations. Others do not.

- Some programs specify all atoms. Others use "residues" or omit hydrogen atoms.

- Individual implementations of even standardized file formats are often buggy, incomplete or do not completely match published standards.

As a free, and open source project, OpenBabel improves by way of helping others. It gains by way of its users, contributors, developers, related projects, and the general chemical community. We must continually strive to support these constituencies.

Usage

Example script : testOpenbabel.sh

#!/bin/bash

#SBATCH -e openbabel_test%j.err

#SBATCH -o openbabel_test%j.msg

#SBATCH -p agustina_thin

#SBATCH -N 4

#SBATCH --ntasks-per-node=24

module load openbabel/3.1.1

obabel --help

Submit with :

sbatch --account=your_project_ID testOpenbabel.sh

More info :

OpenFOAM

OpenFOAM is the free, open source CFD software developed primarily by OpenCFD Ltd since 2004. It has a large user base across most areas of engineering and science, from both commercial and academic organisations. OpenFOAM has an extensive range of features to solve anything from complex fluid flows involving chemical reactions, turbulence and heat transfer, to acoustics, solid mechanics and electromagnetics. The available version is OpenFOAM v2206.

Usage

Example script : test-openfoam.sh

#!/bin/bash

#SBATCH --job-name=test-of

#SBATCH -o test-of_out%j.out

#SBATCH -e test-of_err%j.err

#SBATCH -N 1

#SBATCH -p agustina_thin

module load openfoam/2206

echo "OPENFOAM BIN:"

of_exec "ls -al $OPENFOAM_BIN"

echo "OpenFOAM v2206 HOME:"

of_exec "ls -al $OPENFOAM_HOME"

# base path for custom libraries and models

BASE_COMPILE=/fs/agustina/$(whoami)/base_data_of

BASE_COMPILE_LIB=$BASE_COMPILE/libraries

BASE_COMPILE_SOLV=$BASE_COMPILE/solvers

of_exec "cd $BASE_COMPILE_LIB/myNewLibrary && wclean && wmake"

of_exec "cd $BASE_COMPILE_SOLV/myNewModel && wclean && wmake"

of_exec "mpirun -n 4 myNewModel -parallel"

echo "done!"

Submit with :

sbatch --account=your_project_ID test-openfoam.sh

More info :

OpenMX

OpenMX (Open source package for Material eXplorer) is a software package for nano-scale material simulations based on density functional theories (DFT) [1], norm-conserving pseudopotentials [32,33,34,35,36], and pseudo-atomic localized basis functions [41]. The methods and algorithms used in OpenMX and their implementation are carefully designed for the realization of large-scale ab initio electronic structure calculations on parallel computers based on the MPI or MPI/OpenMP hybrid parallelism. The efficient implementation of DFT enables us to investigate electronic, magnetic, and geometrical structures of a wide variety of materials such as bulk materials, surfaces, interfaces, liquids, and low-dimensional materials. Systems consisting of 1000 atoms can be treated using the conventional diagonalization method if several hundred cores on a parallel computer are used. Even ab initio electronic structure calculations for systems consisting of more than 10000 atoms are possible with the O(N) methods implemented in OpenMX if several thousand CPU cores on a parallel computer are available. Since optimized pseudopotentials and basis functions, which are well tested, are provided for many elements, users may be able to quickly start their own calculations without preparing those data by themselves. Considerable functionalities have been implemented for calculations of physical properties such as magnetic, dielectric, and electric transport properties. Thus, it is expected that OpenMX can be a useful and powerful theoretical tool for nano-scale material sciences, leading to better and deeper understanding of complicated and useful materials based on quantum mechanics. The development of OpenMX was initiated by the Ozaki group in 2000, and from then onward many developers listed in the top page of the manual have contributed to the further development of the open source package. The distribution of the program package and the source codes follow the practice of the GNU General Public License version 3 (GPLv3) [102], and they are downloadable from http://www.openmx-square.org/


Usage

Example script : testOpenmx.sh

#!/bin/bash

#SBATCH --job-name=openMx

#SBATCH --output=openMx.o%j

#SBATCH --error=openMx.e%j

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=4 # the runs below use up to 4 MPI processes

#SBATCH --mem=32G

#SBATCH --time=1-00:00:00

#SBATCH -p agustina_thin

module load openmpi/4.1.5-gcc-12.2.0-vo6j57n

module load openmx/3.9-gcc-12.2.0-yewsx2z

mpirun -np 4 openmx -runtest -nt 1

cp runtest.result runtest.result.4.1

mpirun -np 2 openmx -runtest -nt 2

cp runtest.result runtest.result.2.2

mpirun -np 1 openmx -runtest -nt 4

cp runtest.result runtest.result.1.4

Run this script from the openmx3.9/work directory of the OpenMX source tree.
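Each of the three runs in the script uses 4 cores in total, split differently between MPI processes (-np) and OpenMP threads (-nt). The following minimal Python sketch enumerates those splits for a given core count and prints the matching commands. The helper names `hybrid_layouts` and `runtest_commands` are hypothetical and only serve the illustration.

```python
def hybrid_layouts(total_cores):
    """Return every (mpi_ranks, omp_threads) pair whose product is total_cores."""
    return [
        (ranks, total_cores // ranks)
        for ranks in range(total_cores, 0, -1)
        if total_cores % ranks == 0
    ]


def runtest_commands(total_cores):
    """Build the mpirun and cp lines of the job script for each layout."""
    lines = []
    for ranks, threads in hybrid_layouts(total_cores):
        lines.append(f"mpirun -np {ranks} openmx -runtest -nt {threads}")
        # Keep a copy of the result file, tagged with the layout.
        lines.append(f"cp runtest.result runtest.result.{ranks}.{threads}")
    return lines


if __name__ == "__main__":
    print("\n".join(runtest_commands(4)))
```

Comparing the saved runtest.result files shows whether the different layouts give consistent results on the cluster.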

Submit with :

sbatch --account=your_project_ID testOpenmx.sh

More info : http://www.openmx-square.org/

ORCA

Usage

Example script : testOrca.sh

#!/bin/bash

#

#SBATCH -J testOrca # job name

#SBATCH -o testOrca.o%j # standard output file (%j expands to the job ID)

#SBATCH -e testOrca.e%j # standard error file (%j expands to the job ID)

#SBATCH -n 2

module load orca/5.0.4

orca water.inp

Example file : water.inp

!HF DEF2-SVP

* xyz 0 1

O 0.0000 0.0000 0.0626

H -0.7920 0.0000 -0.4973

H 0.7920 0.0000 -0.4973

*

Example script : testOrca1.sh

#!/bin/bash

#SBATCH --job-name=orca_thin_job # Job name

#SBATCH --output=orca_thin_job.o%j # Output file

#SBATCH --error=orca_thin_job.e%j # Error file

#SBATCH -p agustina_thin # Partition 'agustina_thin'

#SBATCH --nodes=2

#SBATCH --account=proyecto_prueba # Project account

#SBATCH --mincpus=48

#SBATCH --ntasks=12

#SBATCH --cpus-per-task=4

export ORCADIR=/opt/ohpc/pub/apps/orca/orca_5_0_4_linux_x86-64_shared_openmpi411

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/opt/ohpc/pub/libs/hwloc/lib

module load openmpi/4.1.2-gcc-12.2.0-fosm5wz

module load orca/5.0.4

$ORCADIR/orca input.inp > output.out

Example file : input.inp

! B3LYP def2-SVP TightSCF Opt # Change according to your method and requirements

%pal

nprocs 12 # 12 parallel processes in total; keep equal to --ntasks in the job script

end

* xyz 0 1 # System geometry (this is only an example)

C 0.000000 0.000000 0.000000

H 0.000000 0.000000 1.089000

H 1.026719 0.000000 -0.363000

H -0.513360 -0.889165 -0.363000

H -0.513360 0.889165 -0.363000

*
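The nprocs value in the %pal block should agree with the number of tasks requested from Slurm (--ntasks=12 in testOrca1.sh). One way to keep the two in sync is to generate the input file inside the job. The following is a minimal sketch, assuming that Slurm exports SLURM_NTASKS in the job environment (it does when --ntasks is given); the helper names `build_orca_input` and `write_orca_input` are hypothetical.

```python
import os
from pathlib import Path

# Geometry taken from the input.inp example above (methane).
GEOMETRY = """\
C  0.000000  0.000000  0.000000
H  0.000000  0.000000  1.089000
H  1.026719  0.000000 -0.363000
H -0.513360 -0.889165 -0.363000
H -0.513360  0.889165 -0.363000
"""


def build_orca_input(nprocs, method="B3LYP def2-SVP TightSCF Opt"):
    """Return ORCA input text whose %pal block requests nprocs processes."""
    return (
        f"! {method}\n"
        "%pal\n"
        f"  nprocs {nprocs}\n"
        "end\n"
        "* xyz 0 1\n"
        f"{GEOMETRY}"
        "*\n"
    )


def write_orca_input(path="input.inp", default_nprocs=1):
    """Write the input file using SLURM_NTASKS, or default_nprocs outside Slurm."""
    nprocs = int(os.environ.get("SLURM_NTASKS", default_nprocs))
    Path(path).write_text(build_orca_input(nprocs))
    return nprocs
```

Calling `write_orca_input()` in the job before the ORCA command would then produce an input.inp that matches the allocation.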

Submit with :

sbatch --account=your_project_ID Orca_script_name.sh

More info : https://www.faccts.de/orca/

Pennylane

PennyLane is an open-source Python framework for quantum programming, built by researchers for research and suitable for quantum machine learning.

This framework can run on CPU and GPU using its built-in devices. The following example runs PennyLane on a GPU device.
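When the same script has to run on both GPU and CPU nodes, the device can be chosen at run time. The sketch below tries a list of device names in order and returns the first one that can be created. The helper `pick_device` is hypothetical, and catching every exception is an assumption made for simplicity, since the error raised by a missing GPU backend may vary between versions.

```python
def pick_device(make_device, wires,
                preferred=("lightning.gpu", "lightning.qubit", "default.qubit")):
    """Return (name, device) for the first device in `preferred` that can be created.

    make_device is any callable with the signature make_device(name, wires=...),
    for example pennylane.device.
    """
    errors = {}
    for name in preferred:
        try:
            return name, make_device(name, wires=wires)
        except Exception as exc:  # missing plugin, no CUDA runtime, ...
            errors[name] = exc
    raise RuntimeError(f"no usable device among {preferred}: {errors}")
```

With the pennylane module loaded, `name, dev = pick_device(qml.device, wires=4)` should select the GPU simulator on a GPU node and fall back to a CPU simulator elsewhere.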

IMPORTANT: Jobs submitted to GPU queues must request at least 16 CPU cores per GPU, multiplied by the number of GPUs and by the number of nodes selected (16 cores/GPU * num. GPUs * num. nodes).
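The rule can be written as a one-line calculation. The sketch below uses a hypothetical helper, `min_cpu_cores`, and reads "number of GPUs" as GPUs per node, which is consistent with the example script below (1 GPU, 2 nodes, 32 cores).

```python
CORES_PER_GPU = 16  # fixed by the rule above


def min_cpu_cores(gpus_per_node, nodes, cores_per_gpu=CORES_PER_GPU):
    """Minimum CPU cores to request: cores_per_gpu * gpus_per_node * nodes."""
    if gpus_per_node < 1 or nodes < 1:
        raise ValueError("gpus_per_node and nodes must be at least 1")
    return cores_per_gpu * gpus_per_node * nodes


if __name__ == "__main__":
    # 1 GPU on each of 2 nodes, as in the example script below
    print(min_cpu_cores(gpus_per_node=1, nodes=2))  # 32
```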

Usage

Example script : testPennylaneGPU.sh

#!/bin/bash

#SBATCH -e pennylanegpu-errors%j.err

#SBATCH -o pennylanegpu-infos%j.msg

#SBATCH -p rasmia_hopper # H100 queue

#SBATCH -N 2

#SBATCH --gres=gpu:1 # 1 GPU per node

#SBATCH --cpus-per-task=32 # 16 cores per GPU * 1 GPU * 2 nodes

module load nvidia-hpc-sdk/24.5

module load pennylane/0.33.1

nvcc --version

python test-pennylanegpu.py

Submit with :

sbatch --account=your_project_ID testPennylaneGPU.sh
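The Python script below minimizes the energy of a 4-qubit transverse-field Ising ring, H = sum_i X_i X_(i+1) + gam * sum_i Z_i with periodic boundaries. As an independent cross-check of the optimizer's result, the same matrix can be built with plain NumPy Kronecker products and diagonalized exactly. This is a sketch with hypothetical helper names (`pauli_string`, `ising_ring_matrix`) and is not part of the original script.

```python
from functools import reduce

import numpy as np

I2 = np.eye(2)
X = np.array([[0.0, 1.0], [1.0, 0.0]])
Z = np.array([[1.0, 0.0], [0.0, -1.0]])


def pauli_string(ops):
    """Kronecker product of a list of single-qubit operators."""
    return reduce(np.kron, ops)


def ising_ring_matrix(n, gam):
    """Dense matrix of sum_i X_i X_(i+1) + gam * sum_i Z_i on a ring of n qubits."""
    dim = 2 ** n
    H = np.zeros((dim, dim))
    for i in range(n):
        xx = [I2] * n
        xx[i] = X
        xx[(i + 1) % n] = X  # periodic boundary: last qubit couples to the first
        z = [I2] * n
        z[i] = Z
        H += pauli_string(xx) + gam * pauli_string(z)
    return H


if __name__ == "__main__":
    H = ising_ring_matrix(4, 0.3)
    print("exact ground-state energy:", np.linalg.eigvalsh(H)[0])
```

The lowest eigenvalue printed here is a lower bound for any energy the variational optimizer can reach, so it gives a reference for judging convergence.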

Example script : test-pennylanegpu.py

#!/usr/bin/python

# General libraries

from scipy.ndimage import gaussian_filter

import warnings

# Pennylane libraries

import pennylane as qml

from pennylane import numpy as np

warnings.filterwarnings("ignore")

# Minimize the energy of this 4-qubit Hamiltonian given in Pauli operators

# H = 1.0 * XXII + 0.3 * ZIII + 1.0 * IXXI + 0.3 * IZII + 1.0 * IIXX + 0.3 * IIZI + 1.0 * XIIX + 0.3 * IIIZ

def ising_chain_ham(n, gam, pennylane = False):

    # This function builds the Hamiltonian from Pauli operators

    # n = number of spin positions

    # gam = transverse field parameter

    if pennylane:

        import pennylane as qml

        from pennylane import numpy as np

    from qiskit.opflow import X, Z, I

    for i in range(n):

        vecX = [I] * n

        vecZ = [I] * n

        vecX[i] = X

        vecZ[i] = Z

        if i == n - 1:

            vecX[0] = X

        else:

            vecX[i+1] = X

        auxX = vecX[0]

        auxZ = vecZ[0]

        for a in vecX[1:n]:

            auxX = auxX ^ a

        for b in vecZ[1:n]:

            auxZ = auxZ ^ b

        if i == 0:

            H = (auxX) + (gam * auxZ)

        else:

            H = H + (auxX) + (gam * auxZ)

    if pennylane:

        h_matrix = np.matrix(H.to_matrix_op().primitive.data)

        return qml.pauli.pauli_decompose(h_matrix)

    return H

# Hamiltonian definition

n = 4 # number of qubits

gam = 0.3

H = ising_chain_ham(n, gam, pennylane = True) # build the Hamiltonian

print("Hamiltonian in Pauli operators:")

print("--------------------------------------------------\n")

print(H)

# choose device GPU or CPU

dev = qml.device("lightning.gpu", wires = n) # pennylane GPU simulator device

#dev = qml.device("lightning.qubit", wires = n) # pennylane CPU simulator device

init_param = (

    np.array(np.random.random(n), requires_grad=True),

    np.array(1.1, requires_grad=True),

    np.array(np.random.random(n), requires_grad=True),

)

rot_weights = np.ones(n)

crot_weights = np.ones(n)

nums_frequency = {

    "rot_param": {(0,): 1, (1,): 1, (2,): 1., (3,): 1.}, # initial parameters for the gate rotations

    "layer_par": {(): n},

    "crot_param": {(0,): 2, (1,): 2, (2,): 2, (3,): 2},

}

@qml.qnode(dev)

def ansatz(rot_param, layer_par, crot_param, rot_weights = None, crot_weights = None):

    # Ansatz

    for i, par in enumerate(rot_param * rot_weights):

        qml.RY(par, wires = i)

    for _ in list(range(len(dev.wires))):

        qml.CNOT(wires = [0, 1])

        qml.CNOT(wires = [0, 2])

        qml.CNOT(wires = [1, 2])

        qml.CNOT(wires = [0, 3])

        qml.CNOT(wires = [1, 3])

        qml.CNOT(wires = [2, 3])

    # Measure the expectation value of the Hamiltonian

    return qml.expval(H)

max_iterations = 500 # max. iterations for optimizer

# We use the Rotosolve optimizer built into PennyLane

opt = qml.RotosolveOptimizer(substep_optimizer = "brute", substep_kwargs = {"num_steps": 4})

param = init_param

rot_weights = np.array([0.4, 0.8, 1.0, 1.2], requires_grad=False)

crot_weights = np.array([0.5, 1.0, 1.5, 1.8], requires_grad=False)

cost_rotosolve = []

for n in range(max_iterations):

    param, cost, prev_energy = opt.step_and_cost(

        ansatz,

        *param,

        nums_frequency=nums_frequency,

        spectra = [],

        full_output=True,

        rot_weights=rot_weights,

        crot_weights=crot_weights,

    )

    # Compute energy

    energy = ansatz(*param, rot_weights=rot_weights, crot_weights=crot_weights)

    # Calculate difference between new and old energies

    conv = np.abs(energy - prev_energy)